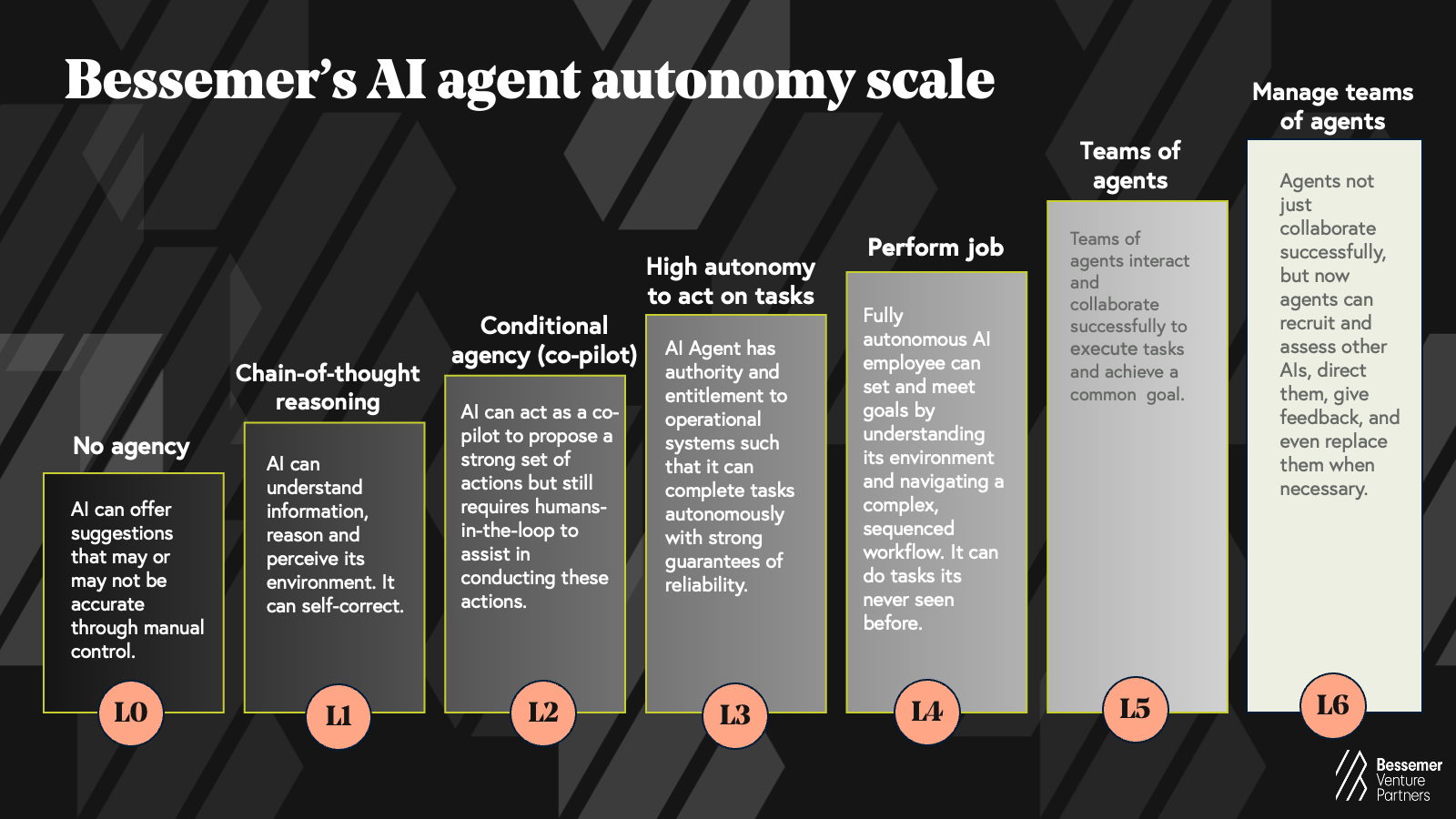

Bessemer’s AI agent autonomy scale—a new way to understand use case maturity

As we move rapidly toward an agent-driven workforce, we’re proposing a clear definition of an “agent” as well as a framework to measure progress.

AI agents are all the rage. And yet, while the term “agent” is constantly bandied about, there is no clear consensus in the AI ecosystem on what it actually means. We’ve seen everything from prompt-driven chatbots to workflow orchestrators referred to as agentic systems.

Technical builders gravitate toward Sutton and Barto’s definition, in which agents are systems that “have explicit goals, can sense aspects of their environments, and can choose actions to influence their environments.” But this classic reinforcement learning definition often feels unsatisfactory to applied AI practitioners, since it doesn’t fully capture the nuances that make an AI agent useful in a real-world setting, including reliability, safety, observability, and efficiency.

What is Bessemer’s definition of an agent?

We define an AI agent as a software application of a foundation model that can execute chain-of-thought reasoning to accurately take action on sequenced workflows.

At Bessemer, we believe an agent is defined by these five criteria:

- Agents have a state or a tangible configuration, which can take the form of a foundation model or software application. Embedded here is also the concept of perception: the agent observes and understands its environment.

- Agents can demonstrate intelligence or logic. They display chain-of-thought reasoning for tasks such as planning, reflection, learning, self-review, and memory.

- Agents can execute actions, such as deploying AI-generated code into production, or crafting and sending an email response. Bessemer portfolio company Anthropic’s Model Context Protocol has unlocked a new standard for agents to complete actions and leverage tool use in the real world.

- Agents are able to exhibit their agency on complex tasks that can form a dynamic or sequenced workflow.

- Agents can complete actions with strong guarantees of reliability and entitlements. (We use the term “entitlements” in a similar way to how it’s used in cybersecurity, where an identity is defined by its access privileges. The agent knows very clearly who it’s acting on behalf of and has the corresponding credentials to take action.)
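The entitlement criterion can be made more concrete with a small sketch. The Python below is a hypothetical model, not Bessemer’s or any framework’s API: the `Entitlement` and `Agent` classes, the scope strings, and the `act` method are illustrative assumptions. It shows an agent that knows which principal it acts for, refuses any action its credentials do not cover, and keeps an audit trail of every attempt.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List


# Hypothetical classes for illustration; not a real framework API.
@dataclass(frozen=True)
class Entitlement:
    principal: str            # who the agent acts on behalf of
    scopes: FrozenSet[str]    # actions the principal has delegated, e.g. "email:send"


@dataclass
class Agent:
    name: str
    entitlement: Entitlement
    audit_log: List[str] = field(default_factory=list)

    def act(self, action: str, payload: str) -> bool:
        """Run an action only if the entitlement grants that scope; log every attempt."""
        principal = self.entitlement.principal
        if action not in self.entitlement.scopes:
            self.audit_log.append(f"DENIED {action} for {principal}")
            return False
        # A real agent would invoke a tool here (for example through an MCP server);
        # this sketch only records that the action was permitted.
        self.audit_log.append(f"OK {action} for {principal}: {payload}")
        return True


agent = Agent(
    name="inbox-agent",
    entitlement=Entitlement("alice@example.com", frozenset({"email:send"})),
)
agent.act("email:send", "Re: quarterly report")  # permitted
agent.act("code:deploy", "build 1.4.2")          # denied: scope was never granted
print(agent.audit_log)
```

Denying by default and logging both outcomes keeps the agent’s behavior observable, which ties the entitlement criterion back to the reliability guarantees above.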

What is the Bessemer AI agent autonomy scale?

As investors, we’re often asked to provide a perspective on which industries or functions have already deployed or soon will deploy AI agents at scale. This led us to develop a framework—inspired by methods from the self-driving car industry—to help us compare the state of AI agent readiness across industries as well as within a vertical by looking at the maturity of use cases.

| Level | Description | Example Use Case |
|---|---|---|
| L0 | No agency; manual or rules-driven systems | Developer prompts LLM via chat |
| L1 | Chain-of-thought reasoning; self-review, logic traceability | AI provides contextual code suggestions, self-critiques |
| L2 | Conditional agency (co-pilot); human-in-the-loop | Code suggestions in integrated development environment (IDE), human approves code deployment |
| L3 | High autonomy; agent acts with strong reliability guarantees | Agent autonomously deploys code |
| L4 | Fully autonomous; performs entire jobs | AI functions as a software engineer |
| L5 | Teams of agents collaborate | AI agents operate as a coordinated engineering SWAT team |
| L6 | Agents manage other agents; meta-coordination | AI product/engineering manager orchestrates agent team |
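Because the scale is ordinal, it maps naturally onto an ordered enumeration. The sketch below assumes, as a simplification the framework itself does not spell out, that each level requires every capability of the levels beneath it. The `UseCase` capability flags and the `classify` helper are hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    L0_NO_AGENCY = 0
    L1_CHAIN_OF_THOUGHT = 1
    L2_CO_PILOT = 2
    L3_HIGH_AUTONOMY = 3
    L4_PERFORMS_JOB = 4
    L5_AGENT_TEAMS = 5
    L6_MANAGES_AGENTS = 6


@dataclass
class UseCase:
    # Hypothetical capability flags, one per step up the scale (in order).
    reasons_with_cot: bool = False
    proposes_actions: bool = False
    acts_without_approval: bool = False
    performs_entire_job: bool = False
    collaborates_with_agents: bool = False
    manages_agents: bool = False


def classify(uc: UseCase) -> Autonomy:
    """Return the highest level whose requirement, and every requirement below it, is met."""
    checks = [
        uc.reasons_with_cot,
        uc.proposes_actions,
        uc.acts_without_approval,
        uc.performs_entire_job,
        uc.collaborates_with_agents,
        uc.manages_agents,
    ]
    level = 0
    for met in checks:
        if not met:
            break  # assumed cumulative: a missing capability caps the level here
        level += 1
    return Autonomy(level)


ide_copilot = UseCase(reasons_with_cot=True, proposes_actions=True)
print(classify(ide_copilot).name)  # L2_CO_PILOT
```

Treating the levels as cumulative is a design choice: under it, a use case that collaborates with other agents but still needs human approval for each action is rated L2, not L5.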

L0: No agency

We don’t believe manually controlled AI systems (such as prompting, rules-based interfaces, or low-code/no-code automations) meet the criteria to be considered agents.

L1: Chain-of-thought reasoning

Agents need to display intelligence in order to truly provide guarantees of reliability. Chain-of-thought reasoning unlocks this, allowing agents to reason over context and self-review, just as a human would review their own work before submitting. Furthermore, from an applied AI standpoint, chain-of-thought provides visibility and traceability for humans to monitor “correctness.”
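The self-review and traceability described for L1 can be sketched as a draft, critique, and revise loop that records each intermediate step. This is a minimal illustration under stated assumptions: `draft`, `critique`, and `revise` are hypothetical stand-ins for foundation-model calls, and the toy functions in the usage example hard-code a wrong first answer so the review step has something to catch.

```python
from typing import Callable, List, Optional, Tuple

Step = Tuple[str, str]  # (stage, content) pairs that make up the audit trail


def solve_with_self_review(
    task: str,
    draft: Callable[[str], str],
    critique: Callable[[str, str], Optional[str]],
    revise: Callable[[str, str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, List[Step]]:
    """Draft an answer, then critique and revise it until the critique passes
    or max_rounds is reached. Every step is recorded so a human can audit it."""
    trace: List[Step] = [("task", task)]
    answer = draft(task)
    trace.append(("draft", answer))
    for _ in range(max_rounds):
        issue = critique(task, answer)  # None means the self-review found no problem
        if issue is None:
            trace.append(("review", "passed"))
            break
        trace.append(("review", issue))
        answer = revise(task, answer, issue)
        trace.append(("revision", answer))
    return answer, trace


# Toy stand-ins for model calls (hypothetical): the first draft is deliberately wrong.
def toy_draft(task: str) -> str:
    return "5"


def toy_critique(task: str, answer: str) -> Optional[str]:
    return None if answer == str(2 + 2) else f"2 + 2 is not {answer}"


def toy_revise(task: str, answer: str, issue: str) -> str:
    return str(2 + 2)


final, trace = solve_with_self_review("What is 2 + 2?", toy_draft, toy_critique, toy_revise)
print(final)  # 4
for stage, content in trace:
    print(f"{stage}: {content}")
```

The returned trace, not just the final answer, is what gives a human reviewer visibility into how “correctness” was reached.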

We expect that foundation models will increasingly subsume the chain-of-thought reasoning we see today in agentic applications. However, we believe chain-of-thought will still be required at the application level to provide more control over, and visibility into, the intermediate steps, especially in regulated industries.

L2: Conditional agency as co-pilot

The distinction at this level is that the AI can not only provide relevant information but also digest information accurately and perceive its environment to propose actions. However, a human-in-the-loop is still the pilot: the agent requires manual review of its proposals and/or authorization from a human to operationalize actions.
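The human-in-the-loop gate that separates L2 from L3 can be sketched as a function that only executes an action after a reviewer approves it. The `ProposedAction` type and the `approve` and `execute` callbacks are hypothetical; in a real product, `approve` might be a review screen in an IDE and `execute` a deployment pipeline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    description: str
    rationale: str  # the agent's reasoning, surfaced to the human reviewer


def run_copilot(
    proposal: ProposedAction,
    approve: Callable[[ProposedAction], bool],
    execute: Callable[[ProposedAction], str],
) -> str:
    """L2 co-pilot pattern: the agent proposes, a human decides, and only
    approved actions are executed."""
    if not approve(proposal):
        return "rejected: action not executed"
    return execute(proposal)


def deploy(action: ProposedAction) -> str:
    # Hypothetical executor; a real one might trigger a deployment pipeline.
    return f"executed: {action.description}"


proposal = ProposedAction(
    description="Deploy build 1.4.2 to production",
    rationale="All tests pass and the build fixes a checkout bug",
)
print(run_copilot(proposal, approve=lambda p: True, execute=deploy))
# executed: Deploy build 1.4.2 to production
print(run_copilot(proposal, approve=lambda p: False, execute=deploy))
# rejected: action not executed
```

In this sketch, moving the use case to L3 would amount to dropping the `approve` call and relying instead on the agent’s reliability guarantees and entitlements.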

L3: High autonomy to act on tasks

The AI agent has the authority and entitlements to act on operational systems, so it can complete tasks autonomously with strong guarantees of reliability.

L4: Perform job

Fully autonomous AI employees can set and meet goals by understanding their environment and navigating complex, sequenced workflows. They can complete tasks they’ve never seen before.

L5: Teams of agents

Agents can interact and collaborate successfully by sharing context and executing tasks in concert to achieve a common goal.

L6: Manage teams of agents

Agents not only collaborate successfully but can also recruit and assess other AIs, direct them, give feedback, and even replace them when necessary.
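The assess, give feedback, and replace cycle described for L6 can be sketched as a manager routine over a team of worker agents. Everything here is an illustrative assumption: workers are plain Python callables, `score` is a hypothetical evaluation function returning a value in [0, 1], per-worker average scores stand in for feedback, and recruits are not themselves evaluated before joining.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Worker:
    name: str
    run: Callable[[str], str]  # hypothetical: a worker agent reduced to a function


def manage(
    team: List[Worker],
    recruits: List[Worker],
    tasks: List[str],
    score: Callable[[str, str], float],
    threshold: float = 0.8,
) -> Tuple[List[Worker], Dict[str, float]]:
    """Assess each worker on the same tasks, report its average score as
    feedback, and replace it with the next recruit if it falls below threshold."""
    feedback: Dict[str, float] = {}
    pool = list(recruits)  # copy so the caller's list is not mutated
    new_team: List[Worker] = []
    for worker in team:
        avg = sum(score(t, worker.run(t)) for t in tasks) / len(tasks)
        feedback[worker.name] = avg
        if avg >= threshold or not pool:
            new_team.append(worker)       # keep: good enough, or no replacement available
        else:
            new_team.append(pool.pop(0))  # replace with the next recruit
    return new_team, feedback


def shout(text: str) -> str:
    return text.upper()


def echo(text: str) -> str:
    return text  # underperformer: returns the text unchanged


def exact_match(task: str, output: str) -> float:
    return 1.0 if output == task.upper() else 0.0


team = [Worker("w1", shout), Worker("w2", echo)]
new_team, feedback = manage(team, [Worker("w3", shout)], ["hello", "agent"], exact_match)
print([w.name for w in new_team])  # ['w1', 'w3']
print(feedback)                    # {'w1': 1.0, 'w2': 0.0}
```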

What’s the outlook for agentic workforces?

The potential for AI to function as full employees and even teams of employees is vast. But it doesn’t end there. Beyond the L6 frontier, we like to draw upon Marvin Minsky’s “society of mind” theory to understand the unlimited potential for economically viable work to be transformed as AI agents transcend individuals or teams and begin organizing themselves into scaled hives and even full companies:

“The mind is not a single, unified entity, but rather a ‘society’ of simpler processes—called ‘agents’—working together to create what we experience as thought, consciousness, and intelligence.”

In time, we believe that AI will improve and even automate every information job done by human beings, including ours as investors. This will bring massive transformation on both the enterprise and customer side, not only fulfilling AI’s productivity promise by enabling humans to be more efficient at work, but possibly driving an evolution of the workforce toward an agentic majority.

If you’re not sold on the imminent possibilities of artificial intelligence, we’d invite you to consider that your skepticism might itself be an illusion.

While it’s natural for humans to feel a level of resistance or fear with the possible risks that new AI technologies pose, we often underestimate the positive impact AI agents may have on people’s lives and the economy. The aim is not to be “intelusional,” but rather to be intentional about how we leverage the potential of AI.

Bessemer’s AI agent autonomy scale was developed by partners at Bessemer Venture Partners — Talia Goldberg, David Cowan, Janelle Teng, and Sameer Dholakia. Talia is known for her expertise in enterprise and AI-driven technologies. David is a founding partner at Bessemer and a leading voice in technological innovation and venture capital. Janelle specializes in AI and enterprise software. Sameer brings deep experience as a technology executive and investor. Collectively, they offer diverse perspectives and deep expertise on the evolving landscape of AI agents and their impact on the future of work.