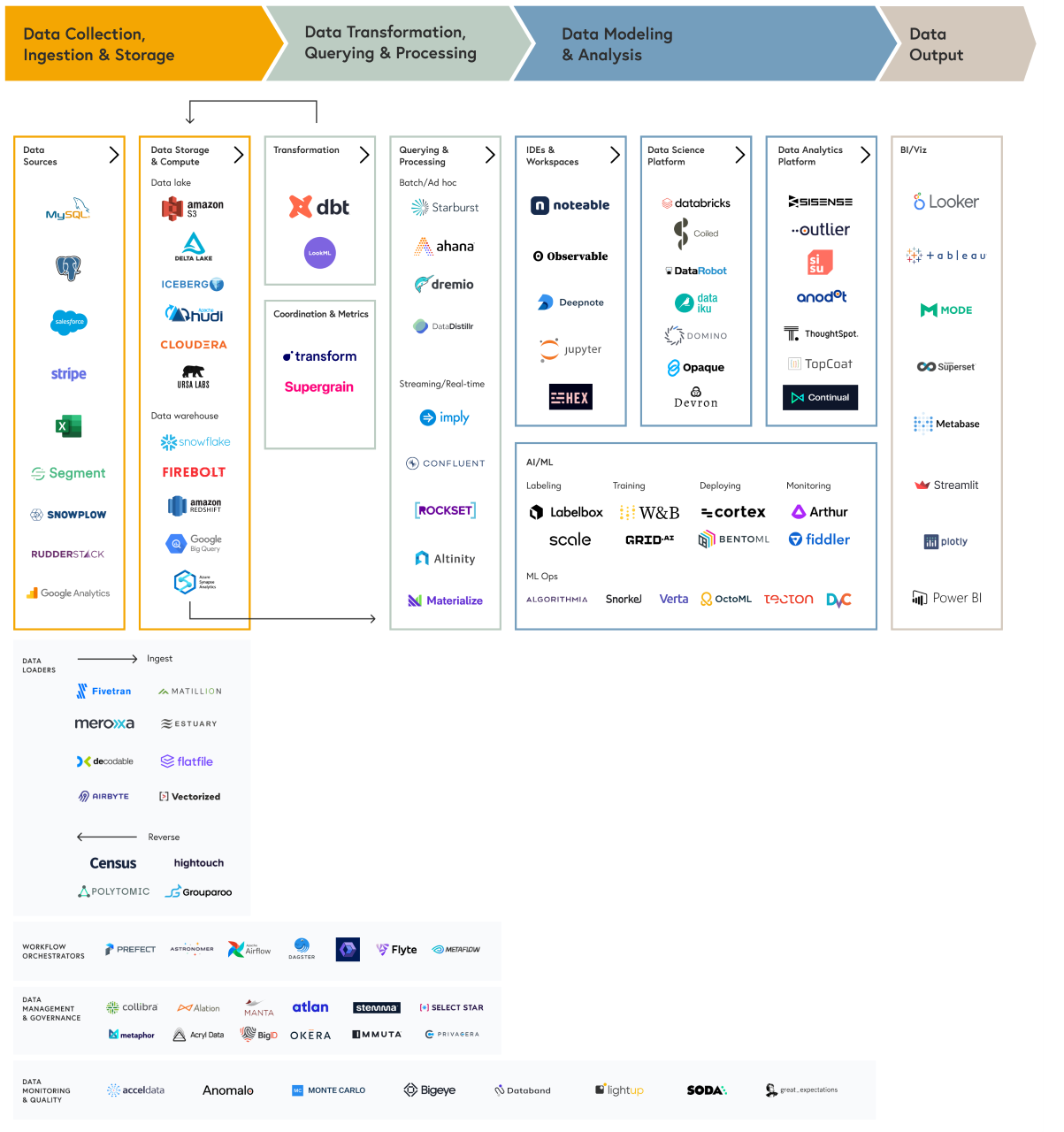

Roadmap: Data Infrastructure

The modern cloud data stack is being rebuilt at a rapid pace, and the future of software will be defined by how accessible and usable data is.

The success of a modern business, from small and medium-sized companies to Fortune 500 enterprises, is increasingly tied to its ability to glean valuable insights from its data or to use that data to drive superior user experiences. As the technical barriers to using data have fallen in recent years, organizations have realized that data infrastructure is fundamentally tied to accelerating product innovation, optimizing financial management, surfacing customer insights, and building flexible, nimble operations that can outmaneuver competitors. After all, global organizational spending on infrastructure has topped $180 billion and continues to grow every year.

At Bessemer, we have had two active roadmaps — Developer Platforms and Open Source Software — that have driven a number of investments within the data infrastructure world. Just as we have seen the developer economy take center stage and transform the way businesses operate with cloud-enabled technologies and even empower non-developers to extend their capabilities, we believe the technology underpinning data is undergoing a similar type of evolution.

One thing is clear — a wave of startups enabling the next generation of data-driven businesses is on the rise, as they offer better and easier-to-use infrastructure for accessing, analyzing, and furthering the use of data.

In working with these types of entrepreneurs for more than a decade, and seeing their companies grow rapidly, we have learned that data infrastructure platforms have distinct go-to-market strategies and buyers independent of developer platforms and open-source software.

This guide is a starting point for our investments in the data stack as a separate category. Several massive businesses have already been built here, and others are waiting to be started by the right founders as the new, accessible, modern data stack empowers every role.

Driving market trends

So why do we believe data infrastructure is on the brink of transformation? We see four major trends driving the evolution of the modern cloud data stack:

1. Growth in adoption of cloud software

As companies of all industries and sizes adopt cloud-based software to run their businesses, they have had to deal with data sprawl across many different sources and systems. Widespread cloud adoption, in turn, promotes cloud-based data stores, workflows, and analytics. As data workflows move to the cloud, more companies are able to build flexible architectures for working with their data. Cloud-based data warehouses are the foundation of these architectures, and over the past few years the technology underpinning them has improved drastically. Startups can take advantage of flexible architectures built around and on top of cloud data warehouses such as Snowflake and Firebolt to build modular, cloud-based products that serve the needs of enterprises.

2. Increase in the volume of accessible data

The movement to the cloud and the growth of software users around the world have also generated data at an exponential rate. According to a study by Splunk, most businesses and IT managers believe that the volume of accessible data will increase more than fivefold in the next five years. As a result, enterprises now need flexible, seamless connections to data sources such as databases, SaaS apps, and web applications, and they must spin up new connections as the number of systems they use to run their businesses grows.

3. Data becomes a differentiator

If software has been eating the world, data is the fuel for the machine. Airbnb, Netflix, and other large companies have invested heavily in their data stacks, not only to serve personalized content but also to support dynamic, automated decision-making. As companies seek to use data as a differentiator, every functional department must gain access to it. Users across product, marketing, finance, and operations are all demanding easy access to pertinent data to inform key decisions.

4. Demand for talent and sophistication in leveraging data

As companies shift from on-premises to cloud-based architectures, we’re witnessing the rapid maturation of roles like data scientists, data engineers, and machine learning engineers, who work with data in these cloud ecosystems. At the same time, demand for data professionals has risen dramatically over the past few years. Because the number of newly trained data infrastructure professionals has grown only at a relatively steady rate, a talent gap has opened: there aren’t enough professionals to fill these roles. This gap further drives demand for data infrastructure software that automates key tasks, as organizations look for ways to cope with the shortage. Meanwhile, today’s data professionals are constantly testing the limits of existing software as they solve ever more complex data problems for their organizations.

Additionally, there’s a massive change in which roles can access and work with data. With faster product cycles and the ability to sell more modular cloud software, we are seeing startups powered by data arrive to supercharge marketing, sales, finance and product roles in all industries from tech to retail to transportation.

These massive shifts have created demand for reliable, easy-to-use products that help data teams work better and produce insights more rapidly. In the past, an enterprise data team may have been an afterthought, relegated to an older, monolithic solution like Informatica. As data teams command more respect and deliver greater ROI, the need for a new, modern data stack has emerged. Best-of-breed, more specialized tools across the value chain are replacing or augmenting each core function of monolithic IT infrastructure.

While the data stack has evolved considerably, teams are at different points on the path to a more modern architecture: some are just starting to use data, while others are more mature in their usage. We believe most enterprises are currently what Snowplow Analytics calls “data adept,” understanding that they need, at a minimum, to produce analytics to understand their businesses and users. Some at the cutting edge are even using data in real time for both analytical and operational use cases. As we move toward a world where enterprises drive strategy based on their data, a growing number of new data roles are entering the job market.

Organizations new to data infrastructure increasingly rely on data analysts, who handle entry-level work such as building dashboards to track a business’s basic operating metrics. Analysts tend to work in Excel or SQL and produce historical, backward-looking analysis. Data scientists build more sophisticated models that are both historical and predictive; for example, they often use a more technical language like Python to predict churn or perform marketing attribution. Both roles rely on data engineers to build and maintain the infrastructure that makes data usable. Data engineers play a role similar to that of platform engineers or architects for developers: they make the producers of analysis more productive. Maturing organizations are increasingly testing more advanced data science use cases and standardizing processes around data usage, including compliance and governance. Finally, a cohort of companies that are highly sophisticated in their use of data is becoming more common. These teams are creating roles like “ML Engineer” for specialized data scientists who can operationalize machine learning in key areas, and they employ multiple teams of data professionals, including these operationally focused data scientists and data engineers.

Our theses on the data ecosystem

As we have talked to founders and data teams navigating the massive transitions happening here, we have identified a few key theses we are particularly excited about investing behind:

1. Data scientists are driving decisions

As the volume of data grows, the business users who work with data and create analyses will be empowered much as software developers were over the past decade. We are excited about products that help data scientists become more effective and efficient in their jobs, including products that help them transform data to fit their needs, build sophisticated models, and extract meaningful insights. There is no better example of this than our portfolio company Coiled, founded by the creators of the open source project Dask. Dask enables data teams to parallelize their workflows in the Python tools and environments they already know and love. While universities and enterprises have adopted Dask and run it themselves, no data scientist wants to be a DevOps engineer running their own compute cluster. Coiled Cloud manages all of that for them, in a secure, cloud-agnostic way.

2. Abstracting complexity away from data engineering

Despite growing awareness of how important data is to their businesses, organizations still face significant friction in putting data to work. Data scientists and business users often wait days or weeks for data engineers to build the pipelines needed to operationalize their data. Even once built, these pipelines can break or prove less scalable than initially thought. We’re excited about products that streamline data workflows, enable real-time data integrations, and establish a coordination layer that delivers data to the tools employees use across functional departments. Today, most data teams use Airflow to orchestrate their workflows, but their instances are often self-hosted and brittle; they break frequently, forcing data scientists to spend hours or days troubleshooting. Our portfolio company Prefect aims to be the new standard for dataflow automation, letting teams build, run, and monitor millions of data workflows and pipelines. Companies such as Thirty Madison, Figma, FabFitFun, Capital One, Washington Nationals, and Progressive use Prefect to power their data analyses, machine learning models, and overall business processes.
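To make “orchestration” more concrete: at its core, an orchestrator runs each pipeline task only after its upstream dependencies have finished, and it retries tasks that fail transiently. The following is a minimal sketch of that idea using only Python’s standard library (`graphlib`, Python 3.9+). It is not Airflow’s or Prefect’s API, and the function `run_pipeline` and the task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable


def run_pipeline(
    tasks: dict[str, Callable[[], None]],
    deps: dict[str, set[str]],
    max_retries: int = 2,
) -> list[str]:
    """Run each task after its upstream dependencies, retrying failures.

    `deps` maps a task name to the names of the tasks it depends on.
    Returns the task names in the order they completed.
    """
    # Include every task, even those with no dependencies.
    graph = {name: deps.get(name, set()) for name in tasks}
    completed: list[str] = []
    # static_order() yields each task only after all of its predecessors
    # and raises graphlib.CycleError if the dependencies form a cycle.
    for name in TopologicalSorter(graph).static_order():
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break  # success: stop retrying this task
            except Exception:
                if attempt == max_retries:
                    raise  # retries exhausted: surface the failure
        completed.append(name)
    return completed


# Hypothetical extract -> transform -> load pipeline whose load step
# fails once with a transient error before succeeding.
calls = {"load": 0}


def flaky_load() -> None:
    calls["load"] += 1
    if calls["load"] == 1:
        raise ConnectionError("transient warehouse error")


tasks = {
    "extract": lambda: None,
    "transform": lambda: None,
    "load": flaky_load,
}
deps = {"transform": {"extract"}, "load": {"transform"}}
print(run_pipeline(tasks, deps))  # ['extract', 'transform', 'load']
```

Production orchestrators layer scheduling, parallel execution, state persistence, and monitoring on top of this dependency-ordering core; the sketch runs tasks sequentially and keeps no state between runs.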

3. Data governance, monitoring and observability

As the number of data sources has grown dramatically and data warehouses have made storing that data cheaper, companies have been faced with a morass of data of unclear origin. New regulations and recent data leaks have forced companies to institute more granular data management and governance. Bessemer has a roadmap devoted specifically to data privacy, but we are bullish on the broader category, including governance, monitoring, and observability tools that ensure the quality of, and trust in, data as it moves throughout an organization. For example, as data grows in scale and complexity, tracing its flow across the organization becomes increasingly difficult. That is a big reason why we invested in Manta, a leader in the data lineage market. Its product connects to a company’s infrastructure to track how data flows within the organization. By providing complete visibility into that flow, the transformations each data point undergoes, and the complex web of inter-dependencies between datasets, Manta enables large data modernization projects while letting data scientists and engineers change their schemas and investigate incidents without fear of unforeseen effects.
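Conceptually, a lineage graph records which datasets are derived from which, so the question “what could break downstream if I change this table’s schema?” becomes a graph traversal. The sketch below illustrates that idea with a breadth-first search in Python; it is not Manta’s product or API, and the function `downstream_impact` and the table names are hypothetical.

```python
from collections import deque


def downstream_impact(edges: dict[str, list[str]], changed: str) -> set[str]:
    """Return every dataset that directly or transitively depends on `changed`.

    `edges` maps each dataset to the datasets built from it
    (upstream -> downstream).
    """
    impacted: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for child in edges.get(current, []):
            # Skip datasets already reached through another path.
            if child not in impacted:
                impacted.add(child)
                queue.append(child)
    return impacted


# Hypothetical lineage: raw tables feed staging models, which feed marts
# and, ultimately, a dashboard.
lineage = {
    "raw.orders": ["stg.orders"],
    "raw.customers": ["stg.customers"],
    "stg.orders": ["mart.revenue", "mart.customer_ltv"],
    "stg.customers": ["mart.customer_ltv"],
    "mart.revenue": ["dashboard.finance"],
}

print(sorted(downstream_impact(lineage, "raw.orders")))
# ['dashboard.finance', 'mart.customer_ltv', 'mart.revenue', 'stg.orders']
```

The `if child not in impacted` check keeps shared descendants such as `mart.customer_ltv` from being queued twice when they are reachable from more than one upstream table.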

4. The next wave of BI & data analytics software

While there are several notable examples of large, successful companies in this space that serve data analysts and the broader employee base, there is a movement toward delivering dynamic, real-time, automated, and highly relevant insights that we’re eager to explore further. Many of these platforms will be specific to a functional area or industry, enabling less tech-fluent users to make sense of their data in a business context. For example, Imply Data provides real-time analytics for enterprises built on top of Apache Druid, a widely adopted open source OLAP database used to power low-latency analytics applications. As the leading OLAP database, Druid can execute high-volume queries rapidly, something traditional data warehouses do not do as well. On top of Druid’s strong technical foundation, Imply has also developed proprietary features, including its Pivot product, which lets users easily create visualizations for analysis and reporting without also needing Looker or Tableau.

5. Infrastructure to accelerate the adoption of machine learning

More and more companies are starting to incorporate machine learning into their production environments, but, candidly, broad market adoption is still early. Nonetheless, we are excited by the potential of MLOps tools that provide the basic infrastructure needed to get more models into production. By removing the need for homegrown solutions or manual processes, these tools let teams focus on developing models for business impact rather than on infrastructure issues. Companies such as OctoML have built products that help engineers get their models into production with optimal performance. Built on top of the open-source compiler Apache TVM, OctoML’s SaaS offering includes the optimization, benchmarking, and packaging steps critical to any production deployment, regardless of whether the models run on CPUs, GPUs, or specialized accelerators.

Our guiding principles on how we will invest

Across all of our theses is a set of principles, or characteristics, that we’ve identified as especially prevalent in the most successful data infrastructure companies and that we look for in new investment opportunities.

1. Ecosystem partnerships & integrations

In modern cloud environments, integrations across tools, whether native, via APIs, or through third-party products like Zapier, are important for enhancing the usability of a particular piece of software. For data infrastructure, such interoperability is a necessity. Data moves through pipelines from source to output, interacting with and being manipulated by a range of tools in the data stack. New infrastructure providers need to work seamlessly with the predominant tools a company already uses or is adopting if they are to achieve any real adoption. Relatedly, initial traction for data infrastructure companies often depends on whether they are close partners of best-of-breed platforms such as Snowflake, BigID, and Databricks, as well as on product integrations. There is perhaps no company more representative of this principle than Fivetran. As Snowflake rose to prominence as the warehouse of choice for the most forward-thinking data teams, George Fraser and the Fivetran team built a product well suited to that ecosystem and invested in selling alongside Snowflake, becoming the preferred partner for any Snowflake customer looking to move data from its sources into the warehouse. We are seeing this dynamic play out in the “reverse ETL” space as well, with companies such as Hightouch and Census pursuing similar strategies that benefit both their partners and their end customers through highly interoperable solutions in what is becoming the “canonical data stack.”

2. Community leadership

The best companies sometimes start with strong bottoms-up adoption, typically through “data mavens” who have strong ties to an open source ecosystem or data infrastructure community. For example, Fishtown Analytics, the company behind dbt (data build tool), is one of the fastest-growing data infrastructure companies. dbt launched as an open source project that allows data scientists to transform data in their Snowflake data warehouse (or other cloud data warehouses) using SQL rather than having to switch to Python or another language. dbt tapped a pre-existing community of SQL users with high intent for a product that let them stay in SQL to perform data science workflows. The team also worked hard to build a community for data scientists around the product, not only to educate them about the project and its value but also to create a space for learning data science best practices. In the siloed world of data infrastructure, introducing a new tool often means not only working closely with existing communities but also fostering new communities around projects and philosophies of how to work with data.

3. Removes friction in the daily workflow

Products that rip and replace the tools behind existing workflows, whether legacy or homegrown, tend to provide the greatest value, and they are the offerings customers are most eager to buy from third parties. In particular, companies are eager to outsource non-core tasks, whether that’s connecting data sources, scheduling workflows, or setting up infrastructure and scaling workloads to run models. As with developer platforms, taking a non-core task off a business analyst’s or data scientist’s plate lets them focus on the clear value driver: producing great analyses and models for the business. One great example is the data science workbook Noteable, which supercharges Jupyter notebooks with enterprise-grade collaboration, security features, and SLAs. Born out of Netflix, Noteable removes friction for data scientists by letting them use the notebooks they already know and love without needing to manage or worry about the infrastructure behind them. Products like these tend to have an easier time monetizing, either through their initial product or by using their position in the workflow to monetize future offerings.

4. Easing interaction/collaboration between roles

Data teams value tools that enable smooth, productive collaboration. There is often friction in the modern data stack when data engineers hand off pipeline processes to data scientists, or when data scientists request specific datasets from data engineers. Interaction between roles often requires time-consuming data pulls and building pipeline infrastructure that lets different data frameworks and formats talk to one another. Transform Data is one company that smooths this collaboration by providing a shared interface where companies can define their most critical metrics and data definitions across teams. This coordination layer saves time that would otherwise be spent searching for data or fixing errors, letting companies focus instead on running experiments that improve business performance with a common understanding of the input and output data involved.
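The idea behind a shared metrics layer can be sketched as a single registry in which each metric is defined exactly once, so that every team computing “active users” uses the same logic. The Python below is a minimal illustration under that assumption; it is not Transform Data’s interface, and the `Metric` class, `register` function, metric names, and event fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass(frozen=True)
class Metric:
    name: str
    description: str
    compute: Callable[[list[Row]], float]


# One shared registry: every team looks metrics up here instead of
# re-implementing them in its own queries.
METRICS: dict[str, Metric] = {}


def register(metric: Metric) -> None:
    # Refuse duplicate names so a metric cannot be silently redefined.
    if metric.name in METRICS:
        raise ValueError(f"metric {metric.name!r} is already defined")
    METRICS[metric.name] = metric


register(Metric(
    name="active_users",
    description="Distinct users with at least one event",
    compute=lambda rows: float(len({r["user_id"] for r in rows})),
))
register(Metric(
    name="revenue",
    description="Sum of order amounts in USD",
    compute=lambda rows: float(sum(r.get("amount", 0) for r in rows)),
))

# Hypothetical event data shared by the teams consuming these metrics.
events = [
    {"user_id": "u1", "amount": 20.0},
    {"user_id": "u2", "amount": 0.0},
    {"user_id": "u1", "amount": 5.0},
]
for name in ("active_users", "revenue"):
    print(name, METRICS[name].compute(events))
# active_users 2.0
# revenue 25.0
```

Because each definition lives in one place, changing how a metric is calculated changes it for every consumer at once, which is the kind of common understanding of input and output data the coordination layer aims for.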

What’s next

We believe we are still in the very early days of a revolution in the data stack. Just as the cloud changed the way we work today, harnessing data through modern cloud-native infrastructure is becoming essential to companies of all sizes and industries. Additionally, as modern data stacks become more widely adopted, we expect to see numerous areas for further enhancement, including streaming data to allow companies to take real-time action, and automated data workflows for specific industries or functional verticals.

We are eager to partner with founders enabling employees, teams, and enterprises to take advantage of their data to power their businesses and user experiences. We will be sharing more of our thoughts in subsequent articles on each of our working theses, but if you are working on a data infrastructure startup or work for a company enabling this transformation, please don’t hesitate to reach out to Ethan Kurzweil, Hansae Catlett, Sakib Dadi, and Alexandra Sukin by emailing us at datainfra@bvp.com.

Notable credits and sources:

- Rudderstack
- DevBizOps
- Ark-Invest