The autonomous robotics future is around the corner

A world where robots can learn, act, and help humans autonomously has been years in the making.

Intuitive Surgical’s robots helped perform 1.6 million surgeries last year, often procedures that would have been impossible for a surgeon alone to perform safely. That’s a staggering number until you consider that it accounts for less than 0.5% of surgeries performed globally. Robotics is an exciting field that holds the potential to unlock substantial value for society, but it’s still a field that is arguably in its infancy.

Today, vertical markets like manufacturing, logistics, and medicine deploy robots to sharpen and speed up their workflows, but these robots require either human control or programming to follow predefined rules in order to operate. Think of pick-and-place robots in factories, or even Intuitive's surgeon-controlled da Vinci surgical robot. These robots excel at taking over repetitive human tasks, improving output while saving time and costs.

In the last several years, we've seen very early adoption of autonomous robots: robots that can perceive their surroundings and act without human input. Autonomous robotics is still a niche field, but we believe its emergence will expand the entire robotics market in the coming years by enabling higher-value use cases.

In the wake of a watershed 2023 for artificial intelligence (AI), we believe autonomous robotics is set to have its moment soon, too. Developers are applying AI technology, like multimodal models and large language models (LLMs), to challenging robotics problems such as perception, path planning, and motion control. In this deep dive, we explain why now is the time to start paying attention to autonomous robotics, including recent market developments and growth opportunities ahead.

Why is autonomous robotics relevant now?

Historically, robotics development required significant investments of time and capital just to build prototypes. But recent technological advancements, such as generative AI and neural networks, have accelerated key phases of the development timeline. Today, robotics ranks among the most mature areas of deep tech on NASA’s Technology Readiness Level (TRL) scale: near-final products are passing tests and approaching commercialization. (Think Cruise’s driverless car service in San Francisco, which became publicly available in 2022.) Now, many are asking the question, “Can autonomous robotics companies become great businesses?”

Unlike conventional software, autonomous robots take far more than code to train: they depend on a range of sophisticated inputs. Autonomous robots learn how to operate through two primary building blocks: cameras and computer vision, and deep learning and generative AI.

Building blocks of autonomous robotics

1. Cameras and computer vision

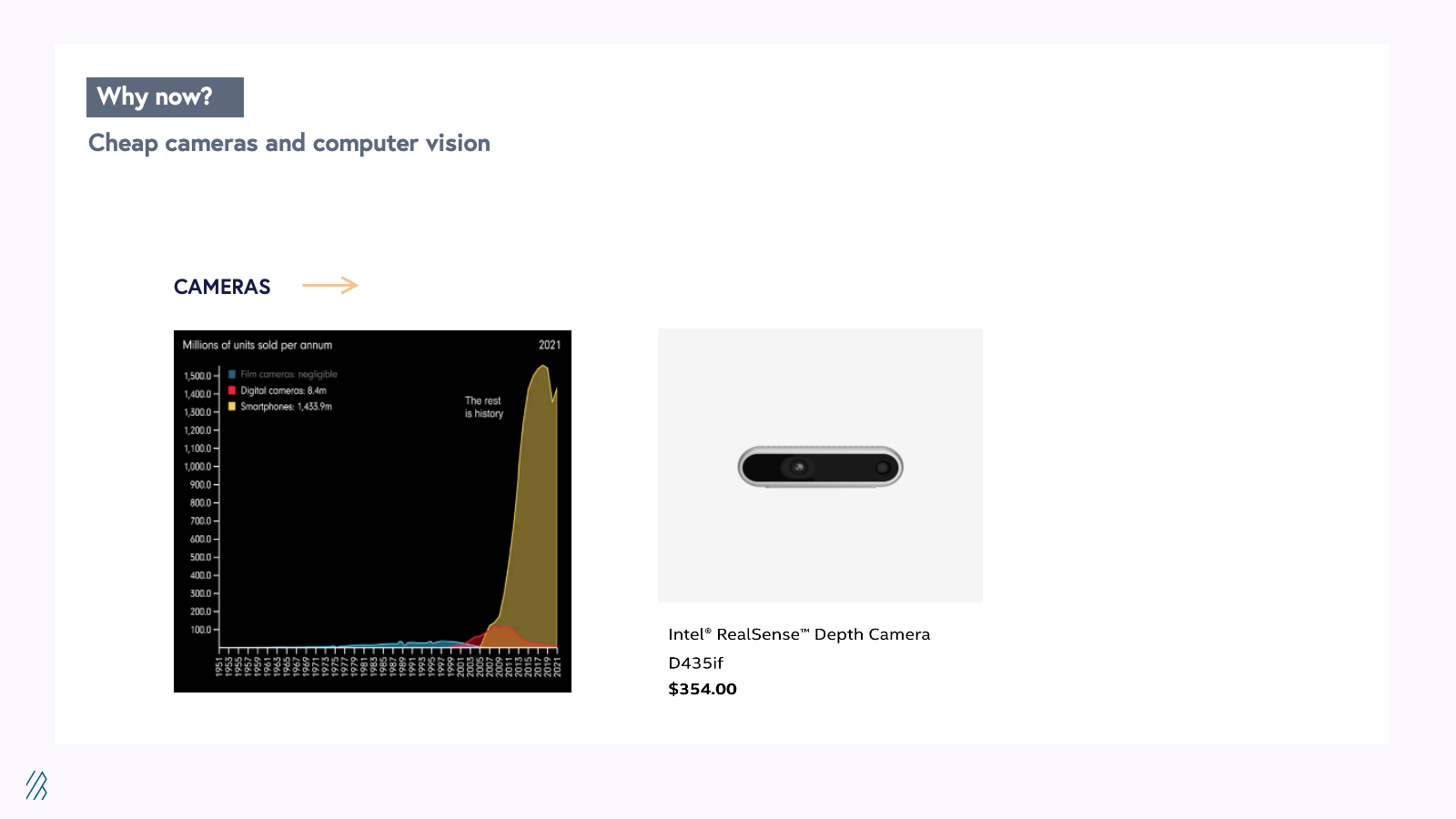

To perceive their environment, autonomous robots rely on cameras and computer vision technology. In the future, we think autonomous robots will use a range of sensors, including LiDAR, radar, and sonar, as well as sensors tuned to other wavelengths of the electromagnetic spectrum, to perceive the world in ways that are impossible for humans. But today, most sensors other than cameras are cost-prohibitive for most applications. High-quality cameras, however, have become inexpensive thanks to 20 years of use in smartphones. Smartphone adoption, and the associated mass production of cameras, has driven substantial improvements in cameras’ performance per dollar in a way that has not occurred with other sensors.

Cameras also typically use passive sensor technology, which is less expensive to manufacture than active sensors such as LiDAR or radar. An active sensor emits a signal (laser light for LiDAR, radio waves for radar) toward a target and measures the time it takes for the reflection to return to the sensor. This often requires mechanical components, such as the rotating LiDAR sensor seen atop Google’s autonomous vehicles, which are more expensive to manufacture at scale than solid-state devices.
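The time-of-flight principle behind active sensors comes down to simple arithmetic: the signal travels to the target and back, so the distance is half the round-trip time multiplied by the signal’s speed. The sketch below is illustrative only; it assumes a single clean return and propagation at the vacuum speed of light (a close approximation for LiDAR and radar in air), and the function name is hypothetical rather than any sensor vendor’s API.

```python
# Minimal time-of-flight ranging sketch (illustrative; function name is hypothetical).
# Assumes a single clean return and propagation at the vacuum speed of light,
# which is a close approximation for LiDAR and radar in air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Return the one-way distance to a target from a round-trip time.

    The emitted pulse travels to the target and back, so the distance
    to the target is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# A return that arrives about 66.7 nanoseconds after the pulse left
# corresponds to a target roughly 10 m away.
print(f"{tof_distance_m(66.7e-9):.2f} m")  # prints "10.00 m"

# Each nanosecond of timing error shifts the estimate by about 15 cm,
# which is why these sensors need very precise timing electronics.
print(f"{tof_distance_m(1e-9) * 100:.1f} cm")  # prints "15.0 cm"
```

The second print hints at part of why active sensors cost more: useful range precision demands timing circuitry accurate to fractions of a nanosecond.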

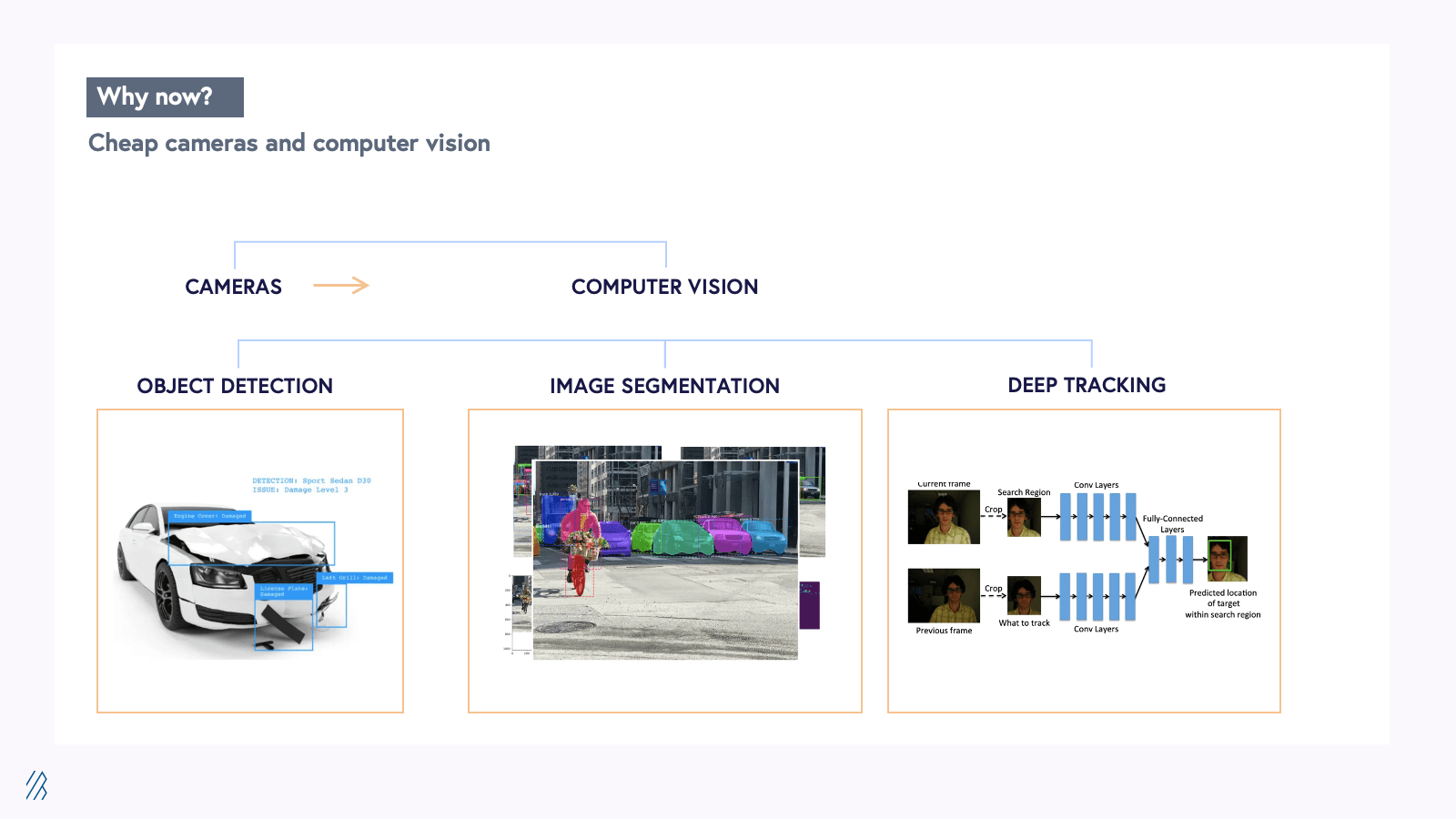

Cameras let robots see, but robots need an extra push to process what they’re seeing. Computer vision technology allows robots to segment objects within a single image frame and track them as they move across successive frames. In other words, it helps robots recognize what they’re seeing over time and understand the world in a way that more closely resembles how humans do.
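One simple way to keep track of segmented objects over time is to link each object’s bounding box in the current frame to the most-overlapping box from the previous frame, measured by intersection-over-union (IoU). The sketch below is a swapped-in, deliberately minimal technique (greedy IoU matching), not the method any particular robot uses; it assumes an upstream detector already produces one box per object per frame, and the class and function names are hypothetical. Production trackers typically add motion models and re-identification.

```python
# Minimal frame-to-frame tracker (illustrative sketch, not a production method).
# Assumptions: an upstream detector/segmenter already yields one bounding box per
# object per frame; boxes are (x_min, y_min, x_max, y_max) in pixels; class and
# function names are hypothetical.
Box = tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (0.0 to 1.0)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Keeps a stable ID for each object by matching boxes across frames."""

    def __init__(self, min_iou: float = 0.3) -> None:
        self.min_iou = min_iou
        self.tracks: dict[int, Box] = {}  # track ID -> last known box
        self._next_id = 0

    def update(self, detections: list[Box]) -> dict[int, Box]:
        unmatched = set(self.tracks)
        current: dict[int, Box] = {}
        for det in detections:
            # Greedily pick the unmatched track whose last box overlaps most.
            best_id, best_score = None, self.min_iou
            for track_id in sorted(unmatched):
                score = iou(self.tracks[track_id], det)
                if score >= best_score:
                    best_id, best_score = track_id, score
            if best_id is None:
                # No sufficiently overlapping track: start a new one.
                best_id = self._next_id
                self._next_id += 1
            else:
                unmatched.discard(best_id)
            current[best_id] = det
        # Tracks with no match in this frame are dropped (no re-identification).
        self.tracks = current
        return current


tracker = GreedyIoUTracker()
frame1 = tracker.update([(0, 0, 10, 10), (50, 50, 60, 60)])
frame2 = tracker.update([(52, 51, 62, 61), (1, 0, 11, 10)])
print(frame1)  # {0: (0, 0, 10, 10), 1: (50, 50, 60, 60)}
print(frame2)  # {1: (52, 51, 62, 61), 0: (1, 0, 11, 10)}
```

Even though the detector lists the two objects in a different order in the second frame, each keeps its original ID, which is the “recognize what they’re seeing over time” property in miniature.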

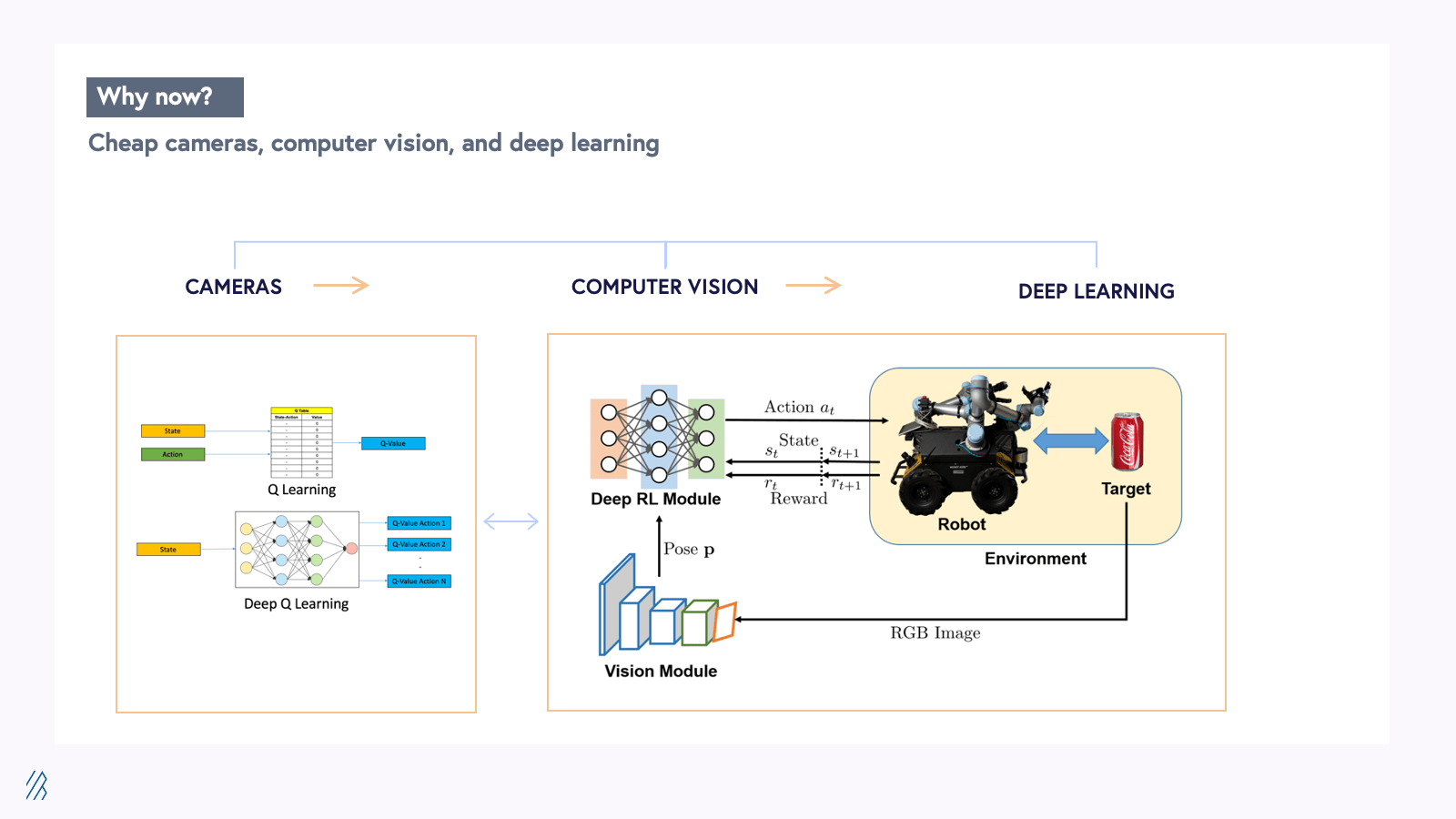

2. Deep learning and generative AI

To gain autonomy, robots need to be able to learn over time and, eventually, make decisions on their own. Deep learning, which is built on neural networks, allows robots to make sense of what they’re seeing and learn from the commands they’re given. Furthermore, recent innovations such as Google DeepMind’s Robotic Transformer 2 (RT-2) now enable a vision-language-action paradigm in which robots ingest camera images, interpret the objects in a scene, and directly predict the actions to perform. Each task, such as “chop the celery” or “clean up the mess”, requires understanding visual-semantic concepts and the ability to control the robot to act on those concepts. This has the potential to reduce friction at the human-machine interface by allowing humans to direct autonomous robots using natural language rather than esoteric robot programming languages.
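To make “directly predict actions” more concrete: the RT-2 paper describes representing robot actions as tokens a language model can output, by discretizing each continuous action dimension into 256 uniform bins. The sketch below illustrates only that encoding idea under stated assumptions; the three-dimensional action, the value ranges, and the function names are hypothetical, and this is not RT-2’s actual code.

```python
# Illustrative sketch of action tokenization in a vision-language-action model.
# Assumptions: 256 uniform bins per action dimension (as described for RT-2),
# and hypothetical per-dimension ranges; function names are hypothetical.
NUM_BINS = 256


def encode_action(values: list[float], low: list[float], high: list[float],
                  num_bins: int = NUM_BINS) -> list[int]:
    """Map each continuous action value to an integer bin index (a token)."""
    tokens = []
    for v, lo, hi in zip(values, low, high):
        v = min(max(v, lo), hi)              # clip into the allowed range
        frac = (v - lo) / (hi - lo)          # normalize to [0, 1]
        tokens.append(min(int(frac * num_bins), num_bins - 1))
    return tokens


def decode_action(tokens: list[int], low: list[float], high: list[float],
                  num_bins: int = NUM_BINS) -> list[float]:
    """Map bin indices back to the center of each bin."""
    return [lo + (t + 0.5) * (hi - lo) / num_bins
            for t, lo, hi in zip(tokens, low, high)]


# Hypothetical end-effector displacement (x, y, z) in meters, each in [-0.1, 0.1].
low, high = [-0.1, -0.1, -0.1], [0.1, 0.1, 0.1]
tokens = encode_action([0.0, 0.05, -0.1], low, high)
action_text = " ".join(str(t) for t in tokens)  # what the model would emit as text
print(action_text)                               # prints "128 192 0"
print(decode_action([int(s) for s in action_text.split()], low, high))
```

Because actions become short strings of integers, the same model that reads a natural-language instruction can emit the robot’s next move as text; the cost is a small quantization error of at most half a bin width per dimension.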

With technological maturity in cameras, computer vision, and deep learning, we get a robot that can perceive, understand, and learn from its environment: a robot that’s autonomous. Generative AI can remove much of the friction in human-robot interaction, making communication closer to speaking with a colleague or friend.

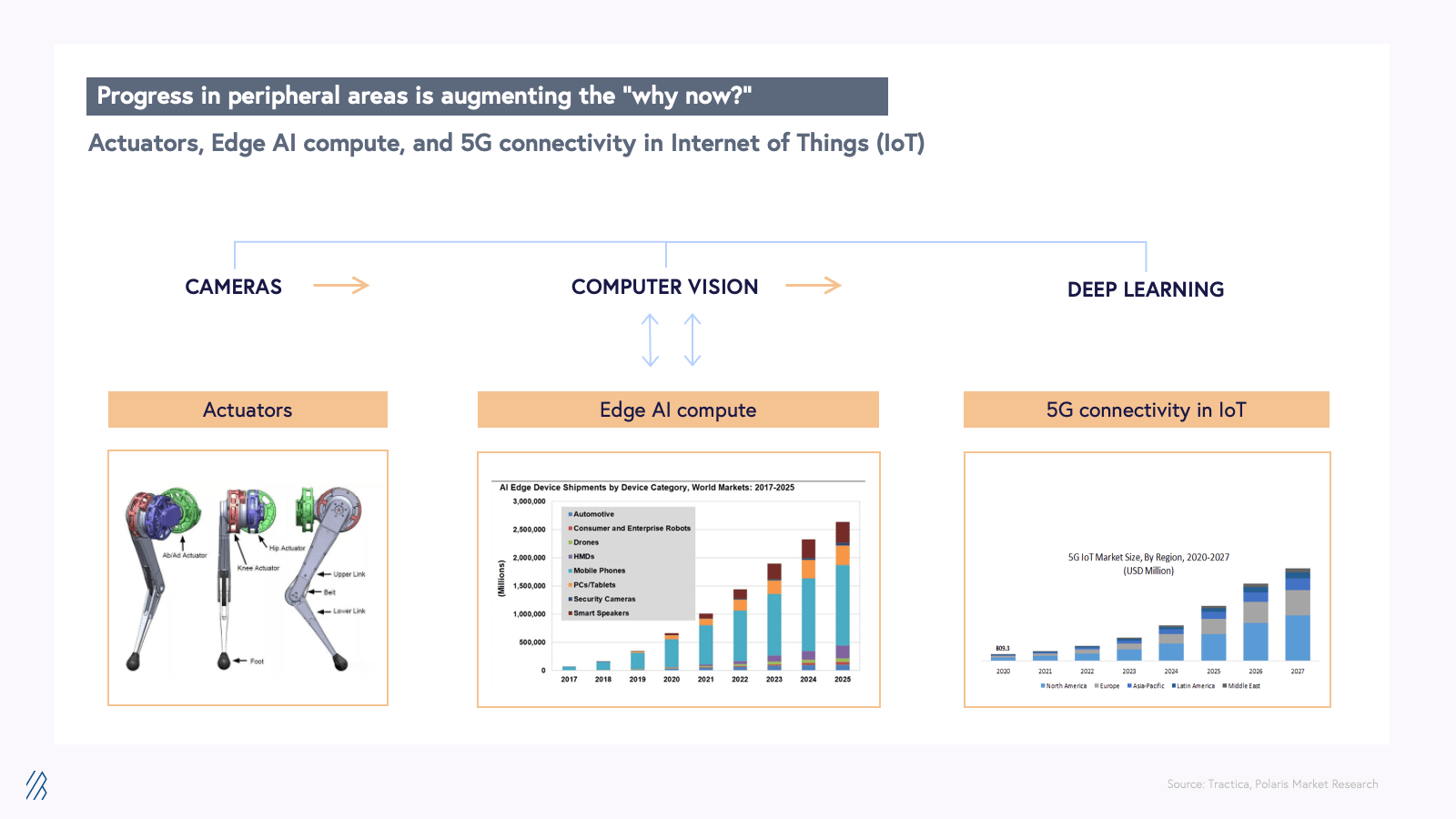

Progress in a number of peripheral areas has also accelerated innovation where autonomous robotics is concerned:

- Actuators: This dexterous hardware acts much like a human joint, giving robots the ability to move fluidly. Actuators have historically been one of the weak links in robot design, because they must be safe, durable, and power- and cost-efficient while also delivering high torque output and torque density. Companies such as ANYbotics are working on some exciting innovations in this area.

- Edge AI compute: Advances in edge computing mean that robots will be able to run more demanding computations on board, delivering quicker and more secure results than cloud-based robotic systems, which send data elsewhere for analysis. This enables a swath of real-time and high-security use cases that weren’t previously possible.

- 5G connectivity: Using 5G, fleets of robots can connect to and learn from one another, even in areas where Wi-Fi signals are weak. Fleet learning enables learning at scale, which could unlock rapid gains in robotic capabilities. Advances in eSIM/iSIM standards and chip availability mean 5G cellular connectivity will become a viable option for many use cases where power usage previously made it impractical.

Our prediction: autonomous robotics is on its way to help humanity

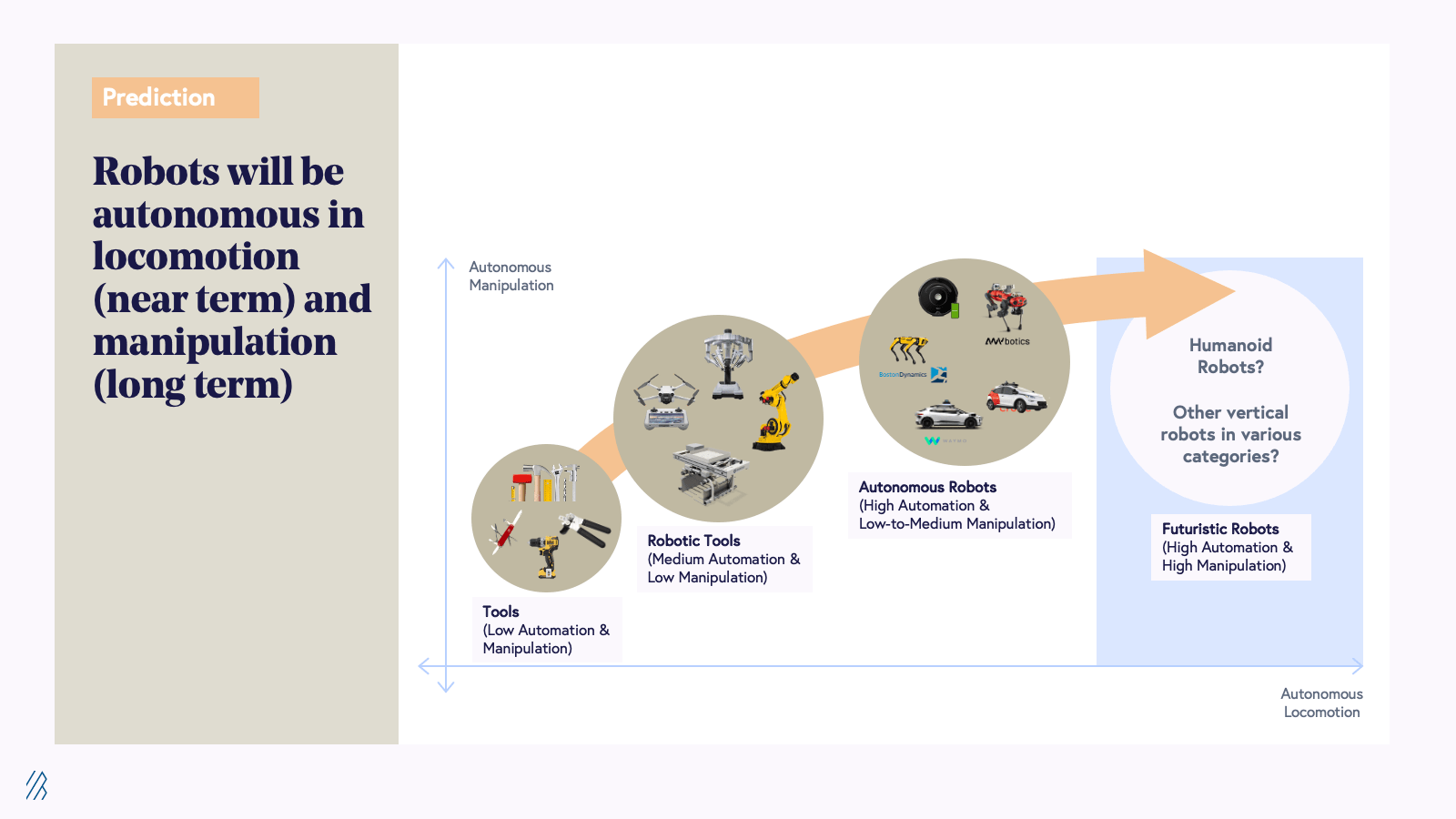

When we talk about autonomous robotics, we see autonomy emerging in two ways: autonomous locomotion and autonomous manipulation.

Autonomous locomotion enables a robot to go from point A to point B on its own (think of the Cruise example from earlier). Conversely, autonomous manipulation is where we see the potential for robots that not only impact productivity, but actually protect — and in some cases enhance — human life.

This kind of autonomy enables robots to do human-like, dexterous tasks on their own, such as pushing things, opening doors, or taking samples.

Having robots perform tasks in high-risk environments, such as oil rigs and nuclear plants, would take humans out of inherently dangerous work environments and could prevent tragic casualties. Companies like Figure, 1X Technologies, and Tesla are already building and testing humanoid robots designed to perform mundane and unsafe tasks.

As companies seek to increase robots’ autonomy and rely less on remote operation, product developers will need to train models on egocentric datasets—visual data from the first-person perspective. New AR/VR products like the Apple Vision Pro offer the potential to accelerate the creation of this training data.

We predict that autonomous and remote manipulation will change a number of industries, including:

Medical robotics and automation

Medical robots assist surgeons with minimally invasive surgeries, improving surgical processes, surgeon ergonomics, and patient outcomes.

In the future, we expect AI and machine learning (ML) to further improve surgical performance and patient outcomes. One day, these robots may perform some surgeries on their own, using deep learning to execute procedures reliably.

Warehouse and fulfillment robotics and automation

E-commerce operations, especially warehouses, already use robotics to accelerate fulfillment. With the addition of 3D vision and 5G connectivity, robots will be able to take on more complex tasks in decision-heavy scenarios.

Construction, cleaning, and inspection

Robots are used to inspect work sites (via aerial drones), automate repetitive processes, assist with welding, injection, and finishing, and help with cleaning (Roomba and ECOVACS come to mind).

As robots get smarter, they’ll be deployed in hazardous industrial environments where humans can’t safely go, and can even guard those sites through security automation. They’ll also contribute to efficiency improvements with tasks like painting, coating, and inspecting machinery.

Human companionship

This one might feel a bit more sci-fi than the others, but it’s also one of the most exciting future use cases to imagine. As robots gain intelligence and dexterity, they could be available for elderly and disabled people who need help living independently. Having a robot aid in meal preparation, cleaning, and personal hygiene, and even offer friendship, would make a huge difference for those who are struggling.

Military drones

Uncrewed aerial vehicles (UAVs) can provide militaries with a competitive advantage in warfare. Companies like Anduril are building not only autonomous drones for military use, but also autonomous vehicles that can intercept and destroy other drones.

Hurdles to overcome

Of course, just like any emerging field, robotics has seen its fair share of roadblocks and challenges preventing wider commercialization. We’re actively looking for companies that understand the following challenges well and are finding innovative ways to overcome them.

- Hardware-related execution challenges: Robotics technology needs hardware, and hardware is at the mercy of a long supply chain. Coordinating these (quite literally) moving parts increases complexity once production is outsourced. Executing this well is extremely important, and it’s also difficult to pull off.

- Capital intensity: Robotics companies must build and hold physical inventory. This leads to inventory build-up, and under robotics-as-a-service (RaaS) contracts the company has to hold each robot as an asset on its balance sheet. In a world of higher interest rates, financing working capital becomes more expensive.

- Revenue quality: Most robotics companies start off by selling hardware for one-time revenue, and many have yet to find a hardware and software combination that generates recurring revenue. Furthermore, businesses with a mix of recurring and one-time revenue are often more difficult for the investor community to assess, since traditional SaaS KPIs may not apply.

- Employment: Robotic automation disrupts global employment. On average, the arrival of one industrial robot in a local labor market coincides with an employment drop of 5.6 workers. This will put pressure on policymakers, so we’re asking companies to think about how regulation might impact their vision from day one.

Our guiding principles on how we will invest

We’re looking to back strong technical teams with deep robotics engineering and supply chain expertise that are building autonomous robots to enable tasks that were previously impossible. This is our top criterion. Beyond this, we’re interested in startups that aspire to build businesses that can achieve the following:

- Disrupt large markets: Targeting large Total Addressable Markets (TAMs) when calculated on a bottom-up basis.

- Enable complex use cases: We’re not interested in labor arbitrage (low level) use cases; there’s already plenty of activity in these markets. Instead, we’re looking for use cases that at the very least protect human life. Ultimately, we want to move in the direction of enabling things that humans previously couldn’t physically or technically achieve (like our oil rig example above).

- Connected, cloud-based fleets: Remote telemetry for fleet learning is a particular feature we’re on the lookout for. Equipped with remote telemetry, robots can transmit data on the fly to other nearby robots, which can then learn from it. We’re also looking for companies that are actively thinking about autonomous manipulation.

- Recurring revenue and high average revenue per account (ARPA): A portion of revenue must be recurring, and average selling price (ASP) must be six figures, with ample room to increase annual contract value (ACV) and ARPA. As a directional example, think more Intuitive Surgical and less Roomba. Even if current market penetration is low, these metrics would signal substantial room to grow.

- Traction is a bonus: We’re looking for companies whose robots have been in active use in production environments for a minimum of six months, and we’re avoiding firms whose sales have been solely to innovation groups.

The road ahead with autonomous robotics

The technology behind autonomous robotics is closer than ever to widespread deployment. From medical and warehouse use cases to construction and companionship, we expect to see autonomous robotics touch a diverse set of industries.

Also on the horizon is teleoperation: robotics manufacturers and customers have the opportunity to remotely operate robots, and in some cases can assign the work to people in other parts of the world. ANYbotics, for instance, has been experimenting with teleoperation since 2019, when it announced that one of its products could perform industrial inspection tasks, like checking energy plants for rust and leaks, via teleoperation. When Elon Musk shared a video of Tesla’s humanoid robot Optimus folding a shirt, he explained that it was remotely operated. Phantom Auto, too, built a platform that enables factory workers to remotely operate forklifts. Teleoperation represents a meaningful intermediate step between today’s human-controlled, in-person robots and fully autonomous robots.

Even with all the data and use cases we currently have, we can’t make a definitive prediction about where autonomous robotics is headed. But with the right investments and the right resourcing, it’s not hard to imagine how further advancements will turn a staggering number of “what if?” scenarios into reality.

If you are building in the autonomous robotics space, we’d love to hear from you. Feel free to reach us on Twitter at @alexferrara, @bhavikvnagda, @nidmarti, and @MadelineShue.