The State of AI 2025

Three years after the AI Big Bang*, early galaxies are forming in the Cloud AI universe, with plenty of “dark matter” still swirling.

There is no cloud without AI anymore.

In this State of AI report, we aim to:

- Share our latest Benchmarks for what great AI startups look like

- Tour our Roadmaps across Infrastructure, Developer Tools, Horizontal AI, Vertical AI, and Consumer while highlighting the stable galaxies in each space—where constellations are forming

- Surface the dark matter—important areas with major questions unanswered

- Offer our five predictions for what we expect to see in the year or two ahead.

*Debates continue over the true AI Big Bang: some point to 2012’s AlexNet breakthrough in deep learning, others to OpenAI’s 2020 scaling laws. For this report, we consider the public release of ChatGPT in November 2022 as the moment AI truly exploded into public consciousness.

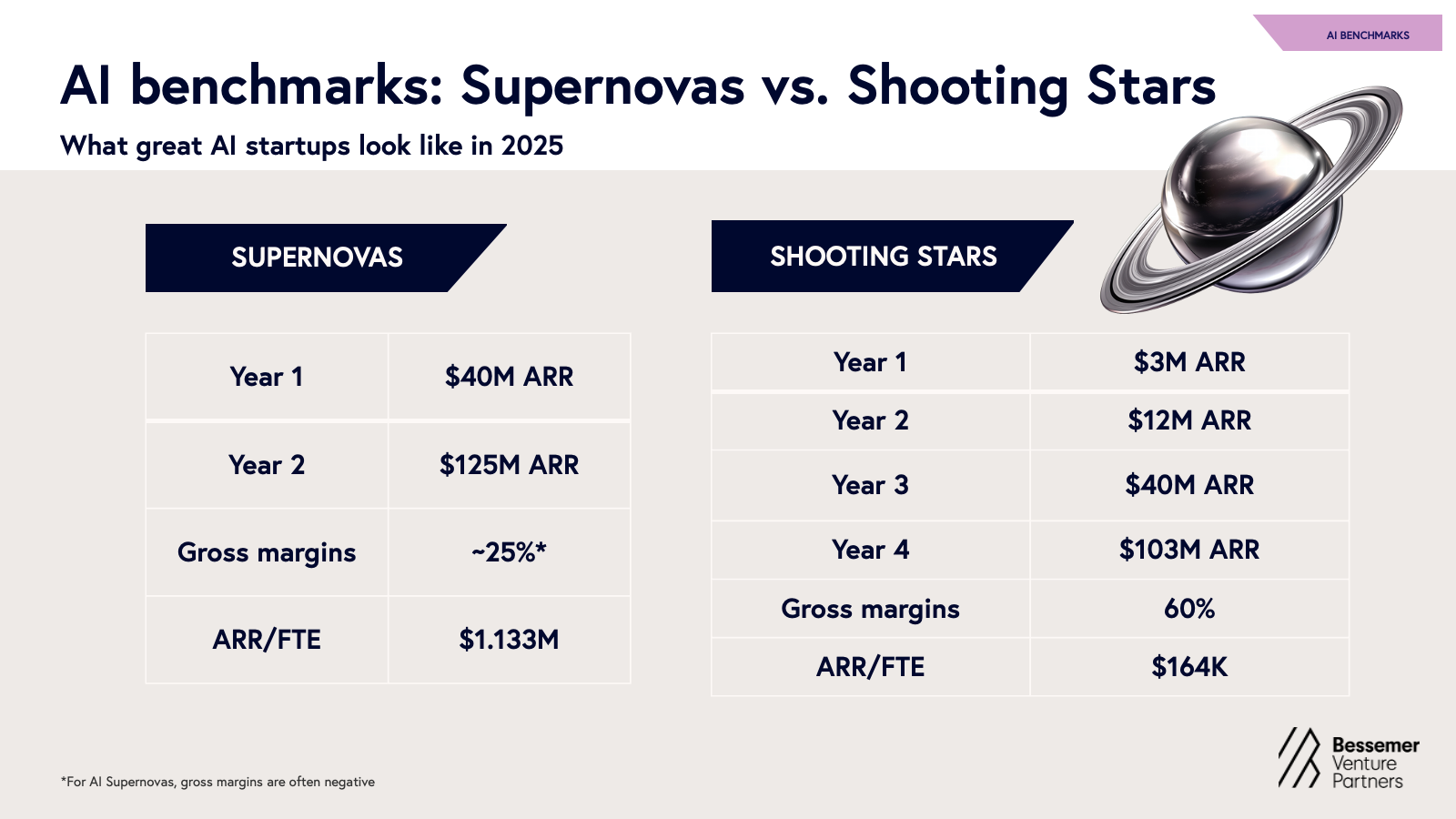

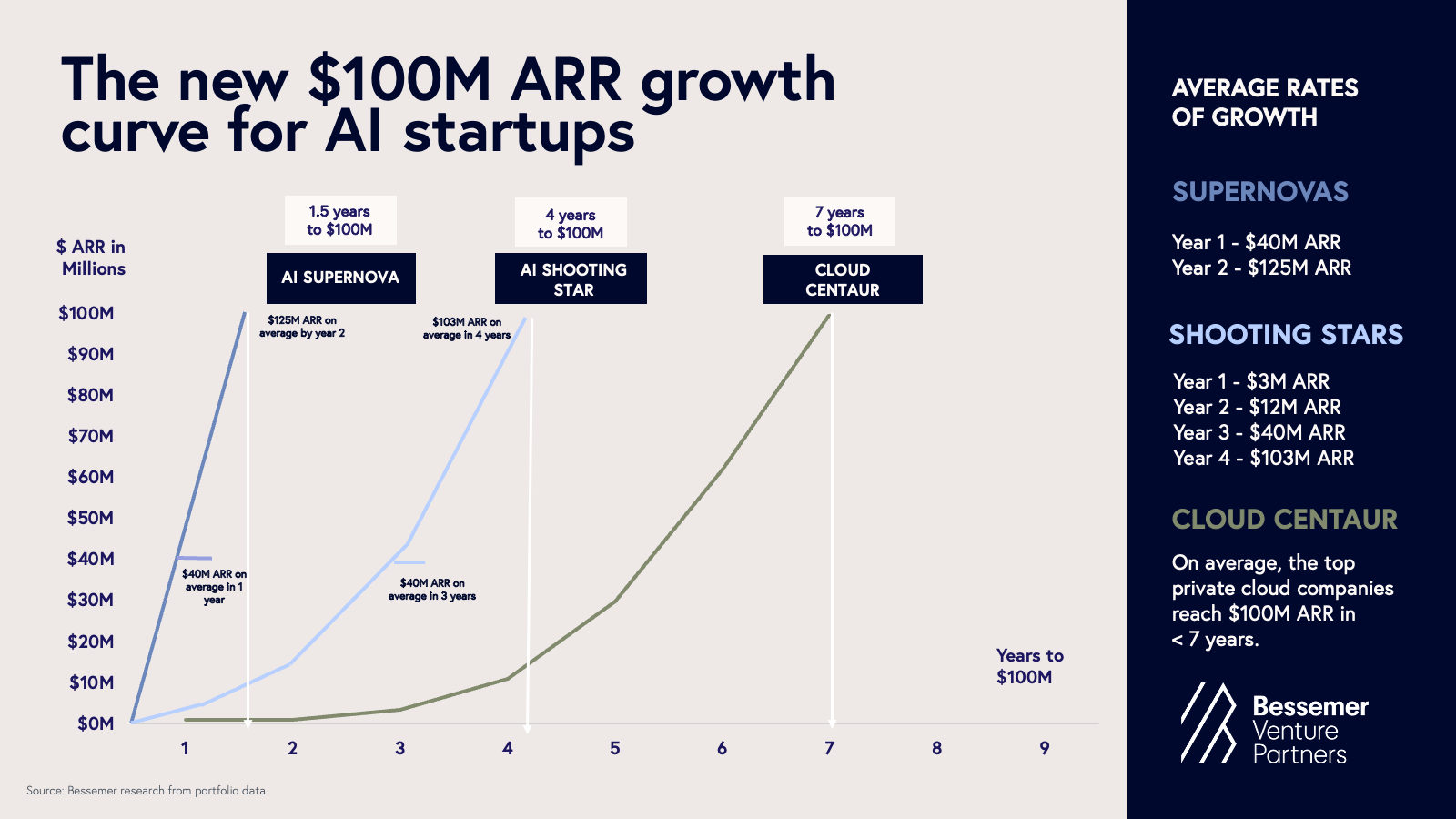

AI benchmarks: What “great” startups look like in 2025

Benchmarks have always been an imperfect way to judge startups, and in the AI era they’re even less reliable. Some AI startups have achieved growth rates the world has truly never seen, leaving every AI founder to wonder what “good” even looks like anymore. We’ve therefore updated our benchmarks to acknowledge that some AI startups are playing a different game.

A tale of two AI startups and the new “T2D3”

To formulate our new set of benchmarks we studied 20 high-growth, durable AI startups across our portfolio and beyond, including breakouts Perplexity, Abridge, and Cursor.

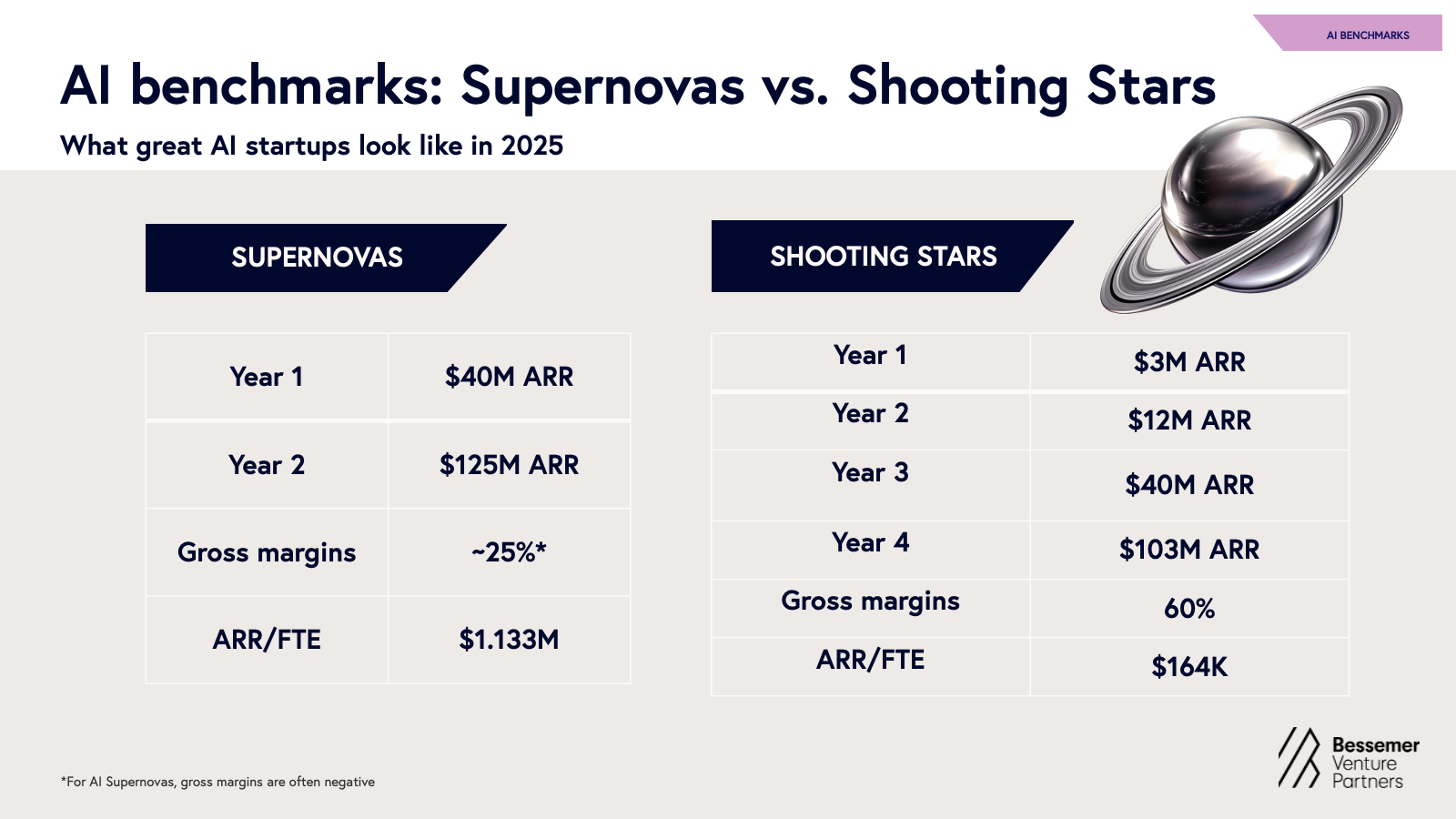

AI supernovas

Supernovas are the AI startups growing as fast as any in software history. These businesses sprint from seed to $100M of ARR in no time, often in their first year of commercialization. They are at once the most exciting and the most terrifying startups we see. Almost by definition, these numbers arise from circumstances where revenue may be vulnerable: fast adoption that either reflects low switching costs or signals a novelty that may not translate into long-term value. These applications are often so close to the core functionality of foundation models that they invite the “thin wrapper” label. And in red-hot competitive spaces, margins are often stretched close to zero or even negative as startups use every tool to fight for winner-take-all prizes.

Supernovas: Explosively scaling AI startups with unprecedented growth and adoption.

| Average benchmarks | Value |
|---|---|
| Year-1 ARR | $40M |
| Year-2 ARR | $125M |
| Gross margin (average) | ~25% (often negative) |
| ARR/FTE (Year-1 average) | $1.13M |

AI Shooting Stars

Shooting Stars, by contrast, look more like stellar SaaS companies: they find product-market fit quickly, retain and expand customer relationships, and maintain strong gross margins (slightly lower than SaaS peers due to faster growth and modest model-related costs). They grow faster on average than their SaaS predecessors, but at rates that still feel anchored to the traditional bottlenecks of scaling an organization. These businesses might not yet dominate headlines, but they’re beloved by their customers and are on a trajectory to make software history.

Shooting Stars: Fast-growing, capital-efficient AI startups with strong PMF, solid margins, and loyal customers, scaling like stellar SaaS.

| Average benchmarks | Value |
|---|---|
| Year-1 ARR | ~$3M |
| Year-2 ARR | ~$12M |
| Year-3 ARR | ~$40M |
| Year-4 ARR | ~$103M |
| Gross margin (average) | 60% |
| ARR/FTE (Year-1 average) | ~$164K |

Key takeaway for AI founders on these new benchmarks: We share these admittedly freakish new benchmarks to showcase the reality of standout AI startups of the moment. That said, building an iconic AI company doesn’t require quadrupling overnight. Many of the strongest companies will still take a more deliberate path, shaped by product complexity and competitive dynamics.

However, speed matters more than ever. AI has unlocked faster product development, GTM, and distribution, making “Q2T3” (quadruple, quadruple, triple, triple, triple)* an ambitious but increasingly achievable benchmark. Dozens of startups have already proven it’s possible, and we believe you can too!

*Admittedly, we haven’t seen five years of data yet, so in the years to come we may learn that these companies won’t truly triple, but “Q2T1D2” isn’t nearly as catchy.
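To make the growth multiples concrete, here is a small, hypothetical calculator (not a Bessemer model) that compounds a starting ARR through a sequence of annual multiples. It compares Q2T3 with the classic T2D3 (triple, triple, double, double, double) from an assumed ~$3M year-1 ARR, the Shooting Star average above, and backs out the year-over-year multiples implied by that table.

```python
from typing import List

# Annual growth multiples for each benchmark (one entry per year of growth).
Q2T3 = [4, 4, 3, 3, 3]  # quadruple, quadruple, triple, triple, triple
T2D3 = [3, 3, 2, 2, 2]  # triple, triple, double, double, double


def project_arr(start_arr_m: float, multiples: List[float]) -> List[float]:
    """Compound a starting ARR (in $M) through successive annual multiples."""
    arr = [start_arr_m]
    for multiple in multiples:
        arr.append(arr[-1] * multiple)
    return arr


def implied_multiples(arr_by_year: List[float]) -> List[float]:
    """Year-over-year growth multiples implied by an ARR series."""
    return [curr / prev for prev, curr in zip(arr_by_year, arr_by_year[1:])]


print(project_arr(3, Q2T3))  # → [3, 12, 48, 144, 432, 1296]
print(project_arr(3, T2D3))  # → [3, 9, 27, 54, 108, 216]

# Shooting Star averages from the table above (~$M, years 1 to 4).
shooting_stars = [3, 12, 40, 103]
print([round(m, 2) for m in implied_multiples(shooting_stars)])
```

Under these assumptions, a strict Q2T3 path from $3M reaches ~$144M by year 4, while the table’s averages imply roughly 4x, then ~3.3x, then ~2.6x, so the typical Shooting Star grows a bit more slowly after its second year.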

Roadmaps of the AI cosmos

In every roadmap that Bessemer tracks, we’ve seen many elements of the AI stack meaningfully crystallize in the past year, resulting in the formation of several early galaxies. We will survey those galaxies in each roadmap while noting the many areas of “dark matter” where we’re still guessing what the future holds.

I. AI infrastructure

Galaxies forming: Model layer

Let’s start with the obvious: a handful of players such as OpenAI, Anthropic, Google (Gemini), Meta (Llama), and xAI continue to dominate the foundation model landscape, advancing model performance while simultaneously exploring vertical integration. It’s clear now that the big labs are moving beyond offering just foundation models and tooling for model development: they are now rolling out agents for coding and computer use, along with MCP integrations. Meanwhile, compute costs continue to drop predictably, driven by software innovations and end-to-end hardware optimization.

AI infrastructure’s Second Act

AI’s first era was defined by major algorithmic breakthroughs: backpropagation, convolutional networks, transformers. The field advanced primarily through algorithmic improvements and scaling methods, and infrastructure mirrored this mindset, fueling the rise of giants in areas such as foundation models, compute capacity, and data annotation. In its second act, AI infrastructure is shifting toward making models work in real-world, production settings, with new categories forming around:

- Reinforcement learning environments and task curation through platforms such as Fleet, Matrices, Mechanize, Kaizen, Vmax, and Veris, as human-labeled data alone is no longer enough to enable production-grade AI

- Novel evaluation and feedback frameworks such as Bigspin.ai, Kiln AI, and Judgment Labs, enabling continuous and specific feedback loops

- Compound AI systems that don’t just focus on raw model horsepower but combine components such as knowledge retrieval, memory, planning, and inference optimization
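As a toy illustration of what an RL environment with a verifiable reward looks like, here is a minimal sketch loosely following the reset/step convention popularized by OpenAI Gym. The arithmetic task and the `ArithmeticTaskEnv` class are hypothetical stand-ins; production environments model far richer, multi-step tasks.

```python
import random
from typing import Optional, Tuple


class ArithmeticTaskEnv:
    """Hypothetical single-step environment: pose a task, score the answer.

    The reward comes from a programmatic verifier (exact match on the sum),
    not from a human label.
    """

    def __init__(self, seed: int = 0) -> None:
        self._rng = random.Random(seed)  # seeded for reproducible episodes
        self._answer: Optional[int] = None

    def reset(self) -> str:
        """Start a new episode and return the task prompt (the observation)."""
        a, b = self._rng.randint(1, 99), self._rng.randint(1, 99)
        self._answer = a + b
        return f"What is {a} + {b}?"

    def step(self, action: str) -> Tuple[float, bool]:
        """Score the agent's answer; returns (reward, done)."""
        if self._answer is None:
            raise RuntimeError("call reset() before step()")
        try:
            reward = 1.0 if int(action.strip()) == self._answer else 0.0
        except ValueError:  # non-numeric answers earn no reward
            reward = 0.0
        self._answer = None  # single-step episode: the task is consumed
        return reward, True


env = ArithmeticTaskEnv(seed=42)
prompt = env.reset()
print(prompt)
print(env.step("not sure"))  # → (0.0, True)
```

The design choice worth noting is that the reward is computed by code rather than by a human annotator, which is what lets environments like this scale beyond human-labeled data.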

Dark matter: The bitter lesson of AI

Rich Sutton’s “Bitter Lesson” reminds us that the most effective advances in AI have historically come from harnessing computation and general-purpose learning, rather than relying on handcrafted features or human-designed heuristics. As AI infrastructure enters its next chapter, it remains an open question which techniques will prove most effective or scalable as practitioners seek to embed context, understanding, and domain expertise to ensure real-world utility.

II. Developer platforms and tooling

Galaxies forming: AI engineering as an integral part of software development

Beyond the infrastructure stack, AI has clearly transformed software development. Natural language has become the new programming interface, with models executing the instructions. In this paradigm shift, the very principles of software development are changing: prompts are becoming programs, and LLMs a new type of computer.

Galaxies forming: Model Context Protocol (MCP)

A new layer of infrastructure, the Model Context Protocol (MCP), will have profound implications for AI development. Introduced by Anthropic in late 2024 and quickly adopted by OpenAI, Google DeepMind, and Microsoft, MCP is becoming the universal specification for agents to access external APIs, tools, and real-time data.
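MCP messages are JSON-RPC 2.0 requests; in the published spec, a client discovers a server’s tools with `tools/list` and invokes one with `tools/call`. The sketch below only builds those two request payloads with Python’s standard library, assuming the initialization handshake and the transport (stdio or HTTP) are handled elsewhere; the `get_weather` tool and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_request_ids = count(1)  # request ids should not be reused within a session


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# 1. Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# 2. Invoke one of them by name (hypothetical tool and arguments).
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Berlin"}},
)

print(list_tools)  # → {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
print(call_tool)
```

Because every tool is reached through the same two methods, an agent can work with any compliant server without bespoke integration code, which is the property that makes a shared specification attractive.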

Dark matter: Memory, context and beyond

As AI-native workflows mature, memory is becoming a core product primitive. The ability to remember, adapt, and personalize over time is what elevates tools from useful to indispensable. Great AI systems are moving beyond recall and evolving with the user. In 2025, large context windows and retrieval-augmented generation (RAG) have enabled more coherent single-session interactions, but truly persistent, cross-session memory remains an open challenge. The foundation model companies are working on memory, and so are startups like mem0, Zep, SuperMemory, and LangMem by LangChain. Current approaches span several layers:

- Short-term memory via expanded context windows (128k to 1M+ tokens, depending on model and architecture)

- Long-term memory via vector DBs, memory OSes (e.g., MemOS), and MCP-style orchestration

- Semantic memory via hybrid RAG and emerging episodic modules, designed for context-rich recall

Open challenges for builders include:

- Building flexible, memory-aware systems with low-latency recall

- Designing for implicit learning and deep integration with core workflows

- Turning context into a compounding advantage—across data, distribution, and delight
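To make the long-term memory layer concrete, here is a deliberately tiny sketch of cross-session recall: facts are stored with a vector and retrieved by cosine similarity. A bag-of-words counter stands in for a real embedding model and a Python list stands in for a vector DB, so treat `MemoryStore` as a hypothetical illustration rather than how mem0, Zep, or any other product works.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase bag-of-words counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(n * b[term] for term, n in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class MemoryStore:
    """Hypothetical long-term memory: persist facts, recall the most relevant."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def remember(self, fact: str) -> None:
        self._items.append((fact, embed(fact)))

    def recall(self, query: str, k: int = 2) -> List[str]:
        """Return up to k stored facts with non-zero similarity, best first."""
        q = embed(query)
        scored = sorted(((cosine(q, vec), fact) for fact, vec in self._items), reverse=True)
        return [fact for score, fact in scored[:k] if score > 0]


store = MemoryStore()
store.remember("User prefers window seats on flights")
store.remember("User is vegetarian")
print(store.recall("Book flights with a window seat", k=1))
# → ['User prefers window seats on flights']
```

A production system would swap in learned embeddings and an approximate-nearest-neighbor index, and would also have to decide what is worth remembering, which is where much of the open work described above lies.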

III. Horizontal and Enterprise AI

Galaxies forming: Systems of Record under pressure

In enterprise software, AI is beginning to expose opportunities for startups to disrupt some of the largest horizontal systems of record (SoR). For decades, SoRs like Salesforce, SAP, Oracle, and ServiceNow held firm thanks to their deep product surfaces, implementation complexity, and centrality to business-critical data. These businesses enjoyed some of the strongest moats in software: switching costs were simply too high, and very few startups even dared to try to unseat them. Now, those moats are degrading.

With AI’s ability to structure unstructured data and generate code on demand, migrating to a new system is faster, cheaper, and more feasible than ever. Agentic workflows are replacing rote data entry, and the typical implementation projects that required armies of systems integrators and years of work are being accelerated by orders of magnitude.

The playbook for challengers is shifting accordingly:

- AI Trojan horse features: A valuable wedge tool lets startups tap into the data flowing into a system of record and start capturing it without ripping the system out on day one

- Implementation: 90% faster with codegen tools and AI’s ability to translate business logic described in natural language into code

- Data: Auto-ingested, leveraging AI’s ability to translate between different schemas, enabling 1-day data migrations, making historical vendor lock-in nearly obsolete

- ROI: 10x vs. legacy, not just incremental; agentic workflows reduce professional services spend and accelerate time-to-value

Galaxies forming: Next-generation CRM, HR, and Enterprise Search

The big question: Are AI-native challengers creating net-new categories, or are they finally threatening incumbents? In CRM, early signs of disruption are promising. These AI-native tools aren’t just replacing existing CRMs; they’re offering a new kind of experience altogether. They offload a tremendous amount of manual work from sales teams while also giving sales managers intelligent recommendations on where to spend their time, based on deal signals auto-synthesized across all their channels. This is a 10x leap, not a 10% improvement.

Similar momentum is building in other horizontal categories:

- HR and recruiting: AI copilots for candidate screening, onboarding, and performance tracking

- Enterprise search: Horizontal copilots trained on internal knowledge are stepping into roles formerly occupied by SharePoint or Notion search

- FP&A: AI-native FP&A tools are allowing financial analysts to centralize data from many different silos and run complex analysis on it without requiring the support of data engineering teams

Dark matter: Enterprise ERP and the long tail of systems of record (SoR)

Despite all this momentum, some of the biggest enterprise surfaces remain surprisingly under-disrupted:

- Enterprise-scale ERP: While we are seeing a tremendous amount of exciting activity among AI-native accounting and ERP platforms, most of them focus on SMB and mid-market customers today, largely in segments like software and services. These are simpler than industries with highly complex manufacturing, supply chain, and inventory requirements. We think AI can offer tremendous value in those more complex settings, but it will take time for new entrants to build the product breadth such customers require, and we think the true enterprise ERP replacement cycle is still many years out.

- The long tail of SoR: While CRMs and ERPs get a lot of the attention when it comes to systems of record, there is a much longer tail of systems of record that also represent massive opportunities for disruption over time. These range from Identity Platforms in enterprise security to Computer-Aided Dispatch systems in public safety to Content Management Systems in web design. We think all of these categories are ripe for disruption, but this is a decade-long journey, and entrepreneurs are just starting to turn their attention to these categories.

IV. Vertical AI

Last year, we proposed a bold thesis: Vertical AI has the potential to eclipse even the most successful legacy vertical SaaS markets. Our conviction in that thesis is stronger than ever. Adoption continues to accelerate, particularly for vertical workflows that have long been manual, service-heavy, or seen as resistant to technology. This has reshaped our view of so-called “technophobic” verticals. In reality, the issue was never a lack of willingness to adopt new tools; it was that traditional SaaS failed to solve high-value, vertical-specific tasks that were multimodal or language-heavy. Vertical AI is finally meeting these users where they are, with products that feel less like software and more like real leverage.

Galaxies forming: Vertical-specific workflow automation

Multiple industries, and surprisingly many of those considered technophobic in past eras, are showing clear signs of meaningful Vertical AI adoption. For example:

- Healthcare: Abridge automates clinical note-taking with generative AI, easing provider burnout while improving documentation quality. SmarterDx helps hospitals recover missed revenue by automating complex coding workflows. OpenEvidence automates medical literature review and delivers instant answers at point of care.

- Legal: EvenUp turns days of manual work into minutes by generating legal demand packages, allowing trial attorneys and personal injury firms to scale caseloads. Ivo helps legal teams automate contract review and perform natural language search across contracts in the business. Legora accelerates legal research, review, and drafting, while enabling collaboration throughout the workflow.

- Education: Companies such as Brisk Teaching and MagicSchool offer AI-powered tools for teachers to streamline tasks like grading, tutoring, and content creation.

- Real Estate: EliseAI automates previously labor-intensive, manual property management workflows, from prospect and resident communications to lease audits.

- Home Services: Hatch acts as an AI-powered customer service representative (CSR) team. Rilla analyzes in-person sales conversations using real-world audio, coaching reps at scale.

Across these examples, the early winners share a few traits:

- Compelling wedge: Early winners start by solving a core pain point that is often language-heavy or multimodal and, as a result, underserved by previous software waves. The best wedge products are intuitive and often embedded in existing workflows to make adoption seamless. Voice and audio appear again and again as a common element of a miraculous wedge.

- Context is key: Defensibility stems from domain expertise: integrations, data moats, and multimodal interfaces built for vertical-specific needs. The strongest teams quickly move beyond fine-tuning and into deep, verticalized utility.

- Built for value: ROI is clear from day one and there’s no Excel spreadsheet needed to explain it to the user. These tools unlock 10x productivity, reallocate labor to higher-value work, reduce costs, or drive topline growth. The value is immediate, not a “nice to have.”

Dark matter: Open questions in Vertical AI

For all the momentum, there are still real unknowns in Vertical AI in three key areas:

- Interaction with legacy systems of record: Will the next generation of Vertical AI companies continue to integrate with and extend the utility of existing systems of record (the status quo today) or begin to compete with them directly? Could we see a future where these legacy systems of record are no longer central at all, and are instead replaced by AI-native, vertical-specific systems of action?

- Competition from incumbents: In verticals where entrenched incumbents are not sleeping at the wheel, will scale and distribution win out over upstart innovation, or will a new generation of companies break through despite the odds?

- Sustainable data moats: As Vertical AI companies expand their scope, can they maintain meaningful data advantages in industries where data is fragmented, privacy-sensitive, and often difficult to access or standardize at scale?

V. Consumer AI

As the underlying technology evolves, so do opportunities to tap into new consumer needs. Last year, most consumer usage leaned toward productivity-driven tasks, such as writing, editing, and searching, as consumers explored the novelty and utility of AI. But we’re starting to see a shift toward deeper use cases, including therapy, companionship, and self-growth. AI is no longer just a tool for task assistance; it’s reaching into more meaningful areas of consumers’ lives.

Galaxies forming: AI assistants for everyday tasks and creation

Consumers across age groups are increasingly turning to general-purpose LLMs, particularly ChatGPT and Gemini, for daily or weekly assistance (with an estimated 600M and 400M weekly active users, respectively, as of March 2025). What began as a novelty has become a habit, with these tools now serving hundreds of millions of users each week. Even as a long tail of specialized apps emerges, most consumers continue to rely on these general assistants for a wide range of needs, including research, planning, advice, and conversation.

Over the last year, voice emerged as a powerful modality for how consumers interact with these applications. Unlike legacy assistants like Alexa or Siri, LLM-powered voice AI can handle open-ended questions, facilitate reflection, and support more fluid, conversational exchanges, providing an intuitive, hands-free way to interact with technology. Voice AI platforms like Vapi are helping power interactions with machines that span language, context, and emotion.

Perhaps one of the most meaningful shifts is in how consumers search for information and interact with the web altogether. In this evolving landscape, Perplexity has emerged as a breakout darling. Its model-agnostic orchestration and blazing-fast UX have made it a go-to for AI-native search. With the launch of Comet, Perplexity’s agentic browser, the company is pushing the frontier further; the agentic browser may well become the defining form factor for the next generation of agents that are ambient and proactive.

Beyond its emerging role as a superior assistant, AI is also lowering the barrier to creation, turning every consumer into a potential creator. Consumers are building apps with tools like Create.xyz, Bolt, and Lovable; generating music with Suno and Udio; producing multimedia with platforms such as Moonvalley, Runway, and Black Forest Labs; and accelerating ideation and iteration with tools like FLORA, Visual Electric, ComfyUI, and Krea. Together, these tools are pushing the boundaries of what we once thought possible.

Galaxies forming: Purpose-built AI assistants

As consumers look to integrate AI more deeply into their daily lives, a wave of consumer applications has emerged to address specific needs. One of the fastest-growing areas is mental health and emotional wellness. While “ChatGPT therapy” continues to gain traction, we’re also seeing the rise of purpose-built tools centered on self-reflection and personal growth. This includes AI journals and mentors like Rosebud and gamified self-care companions like Finch, which help users set personal goals, build healthy habits, and track emotional well-being. Character.AI was an early signal of consumer appetite for emotionally expressive AI, but over the past year, that demand has gone mainstream, with LLM-powered tools increasingly designed to support long-term memory, emotional resilience, and self-development.

Dark matter: Clear unsolved consumer pain points

Some of the most obvious consumer use cases remain underserved not due to lack of demand, but because they still require too much manual action on the user’s part. While early agentic products are emerging, the underlying technology is still maturing.

- Travel: Travel booking remains fragmented and time-consuming. The opportunity for a personalized, end-to-end travel concierge is enormous, but still unclaimed.

- Shopping: There is an opportunity for e-commerce to be fundamentally reshaped when the starting point is no longer Google but agents that handle browsing, price comparison, and even checkout on the consumer’s behalf.

Bessemer's top AI predictions for 2025

1. The browser will emerge as a dominant interface for agentic AI

As agentic AI evolves, the browser is emerging as a potential environment for autonomous execution—not just a tool for navigation, but a programmable interface to the entire digital world.

2. 2026 will be the year of generative video

2024 marked the mainstream inflection point for generative image models. 2025 saw a similar breakout in voice, driven by improvements in latency, awareness, human-likeness, and customization, coupled with massive cost reductions. 2026 is shaping up to be the year video crosses the chasm. Model quality (across Google’s Veo 3, Kling, OpenAI’s Sora, Moonvalley’s Marey, and emerging open-source stacks) is accelerating. We’re nearing a tipping point in controllability, accessibility, and realism that will make generative video commercially viable at scale.

We also expect the next 12 months to clarify the market structure for generative video:

- Do large labs win it all? Models like Google’s Veo 3 are setting the benchmark for video realism and control. Higgsfield is making waves by building differentiated applications using in-context learning on top of existing frontier models—showing that you don’t necessarily need to train your own model to build a powerful product.

- Will open-source catch up? Unlike image generation, where open models have excelled, video has had fewer open-source leaders. Video models are compute- and data-intensive, costly to train, and complex to evaluate. That said, we predict strong open video models will emerge in 2026; Qwen’s open video model is an early winner, and momentum is building.

- Are there advantages for real-time or low-latency use cases? We’re watching early teams like Lemonslice experiment with streaming video and real-time inference, where speed and responsiveness can become product moats in themselves.

Use cases we’re watching most closely include:

- Cinematic video: tools for creators, studios, and marketing teams, as with Moonvalley

- Real-time, low-latency generation: livestreaming, virtual influencers, gaming

- Extreme realism: photorealistic storytelling, virtual production

- Personalized content and social identity

- Developer workflows that make it easier to create video applications and outputs

3. Evals and data lineage will become a critical catalyst for AI product development

One of the biggest unsolved bottlenecks in enterprise AI deployment is evaluation. How is a product, feature, or algorithm change “doing”? Do people like it? Is it increasing revenue, conversion, or retention? Nearly every company still struggles to assess whether a model performs reliably in its specific, real-world use cases. Public benchmarks like MMLU, GSM8K, or HumanEval offer coarse-grained signals at best, and often fail to reflect the nuance of real-world workflows, compliance constraints, or decision-critical contexts. What enterprises need instead:

- Private, use-case-specific evals built on proprietary data

- Business-grounded metrics like accuracy, latency, hallucination rates, customer satisfaction

- Continuous eval pipelines tightly integrated into production systems and feedback loops

- Lineage and interpretability, especially in regulated verticals like healthcare, finance, and insurance

We see room for new tooling in:

- Multi-metric evals (e.g., accuracy, hallucination risk, and compliance)

- Synthetic eval environments for stress testing agents

- Interoperability with logging, retrieval, and feedback systems

- Support for model drift and continuous updates over time
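The sketch below illustrates a private, multi-metric eval run of the kind described above: it scores a model against use-case-specific cases for accuracy, a crude groundedness check as a hallucination proxy, and latency, then applies a pass/fail gate that a continuous pipeline could enforce before shipping a change. The `EvalCase` fields, the substring-based groundedness check, and the thresholds are all hypothetical simplifications.

```python
import statistics
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    prompt: str
    expected: str
    context: str  # source text the answer should be grounded in


def run_eval(model: Callable[[str], str], cases: List[EvalCase]) -> Dict[str, float]:
    """Score a model on private eval cases across several metrics."""
    correct = ungrounded = 0
    latencies: List[float] = []
    for case in cases:
        start = time.perf_counter()
        answer = model(case.prompt)
        latencies.append(time.perf_counter() - start)
        if answer.strip().lower() == case.expected.strip().lower():
            correct += 1
        # Crude hallucination proxy: the answer text never appears in the context.
        if answer.strip().lower() not in case.context.lower():
            ungrounded += 1
    n = len(cases)
    return {
        "accuracy": correct / n,
        "hallucination_rate": ungrounded / n,
        "mean_latency_s": statistics.mean(latencies),
    }


def passes_gate(report: Dict[str, float], min_accuracy: float = 0.9,
                max_hallucination: float = 0.05) -> bool:
    """Hypothetical release gate for a continuous eval pipeline."""
    return (report["accuracy"] >= min_accuracy
            and report["hallucination_rate"] <= max_hallucination)


# Toy "model": canned answers, one of them wrong and ungrounded.
CANNED = {"Capital of France?": "Paris", "Capital of Peru?": "Quito"}
cases = [
    EvalCase("Capital of France?", "Paris", "Paris is the capital of France."),
    EvalCase("Capital of Peru?", "Lima", "Lima is the capital of Peru."),
]
report = run_eval(lambda p: CANNED.get(p, ""), cases)
print(report["accuracy"], report["hallucination_rate"])  # → 0.5 0.5
print(passes_gate(report))  # → False
```

In a real deployment the cases would come from proprietary production data, and the groundedness check would be replaced by something far more robust than substring matching; the point of the sketch is that several metrics are tracked together and gate every change.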

4. A new AI-native social media giant could emerge

Major shifts in consumer technology have historically paved the way for new social giants. PHP enabled Facebook. Mobile cameras made Instagram possible. Advances in mobile video propelled TikTok. It’s hard to imagine that the new capabilities enabled by generative AI won’t lead to a similar breakout.

5. The incumbents strike back as AI M&A heats up

After two years of rapid disruption by AI-native startups, the enterprise giants are striking back, not by rebuilding from scratch but by acquiring the capabilities they need to catch up. In 2025 and 2026, we expect to see a surge in M&A activity as incumbents move aggressively to buy their way into the AI era. For founders, this means:

- Be ready for strategic interest: If you're building a domain-specific or infrastructure-layer AI product, expect inbound from legacy players looking to fill gaps.

- Play for leverage: The best-positioned startups will have strong technical moats, customer traction, and embedded workflows that make them hard to replicate.

- Know your acquirer’s roadmap: Understand where incumbents are falling behind in your space. If you can deliver what they can’t build fast enough, you’re valuable.

The founder’s edge in the AI cosmos

We’re no longer at the dawn of AI—we’re deep in its unfolding galaxies. Today’s top startups aren’t just building faster software. They’re designing systems that see, listen, reason, and act—embedding intelligence into the fabric of work and life.

Here are the top takeaways for AI application founders:

- Two AI startup archetypes are winning: On average, Supernovas hit ~$100M ARR in 1.5 years, but often with fragile retention and thin margins; Shooting Stars grow like stellar SaaS, from ~$3M to ~$100M ARR by year 4, with strong PMF and healthy margins.

- Memory and context are the new moats: The most defensible products will remember, adapt, and personalize. Persistent memory and semantic understanding create emotional and functional lock-in.

- Systems of action are replacing systems of record: AI-native apps don’t just store data—they act on it. Don’t bolt AI onto legacy software—reimagine the entire workflow.

- Start with an AI wedge: Solve a narrow, high-friction problem (e.g., legal research, sales notes). Deliver 10x value fast—then expand.

- The browser is your canvas: Agentic AI is shifting to the browser layer—now a programmable environment where agents observe and execute. Build for this surface; it’s the new operating layer.

- Private, continuous evaluation is mission-critical: Public benchmarks aren’t enough. Enterprises demand trusted, explainable performance. Build in eval infrastructure from day one.

- Speed of implementation is a strategic advantage: Onboarding that once took months now takes hours. Codegen, auto-mapping, and natural language interfaces collapse vendor lock-in.

- Vertical AI is the new SaaS: "Technophobic" industries are adopting AI fast. Win by embedding deeply, proving ROI from day one, and scaling quickly.

- Incumbents are awake—and acquisitive: SaaS giants are buying their way into AI. Build technical and data moats. Be M&A-ready, but operate like you’ll own the category.

- Taste and judgment are your differentiators: In a world of agents and automation, human insight is the edge. Founders who intuit what should exist—not just what can—will define the next era.