Perspective: Abstracting away the complexity of data engineering

The modern cloud stack is increasingly complex, but emerging companies make it easier for data teams to transport, work with, and leverage the benefits of data.

As we explained in our Data Infrastructure Roadmap, data continues to be generated across many disparate systems, and business users throughout the organization want to make use of data that is still siloed. Most companies are shifting toward centralizing their data in a single repository, whether a data warehouse, data lake, or lakehouse. However, as data becomes more important to enterprises of all sizes, there is a growing need to move that data and work with it at every step, from source to store to ultimate endpoint.

Data models are being run with greater frequency, which makes the ability to move, access, and work with data quickly more and more important. Even with a centralized repository, data rarely moves in a linear fashion; the result is a complex, interwoven data architecture in which tasks depend on one another. These difficult integrations are developed by engineers, whom data scientists rely on to maintain the pipelines and connectors that manage how data flows. In most scenarios, these integrations are barely self-serve and take time, coordination, and an investment of engineering resources to build properly.

Despite the growing awareness of how important data is to their businesses, organizations still face significant friction when they try to put data to use. Data scientists and business users often wait days or weeks while overburdened data engineers build the pipelines needed to operationalize their data. Even once those pipelines are built, they can break or turn out to be less scalable than initially thought. We are excited about products that help to streamline data workflows, enable real-time data integrations, and establish a coordination layer to deliver data to the tools used by employees in various functional departments. This article will elaborate on the products helping to abstract away complexity from data engineering problems.

An overview of the emerging categories that make data engineering less complex

ELT Solutions

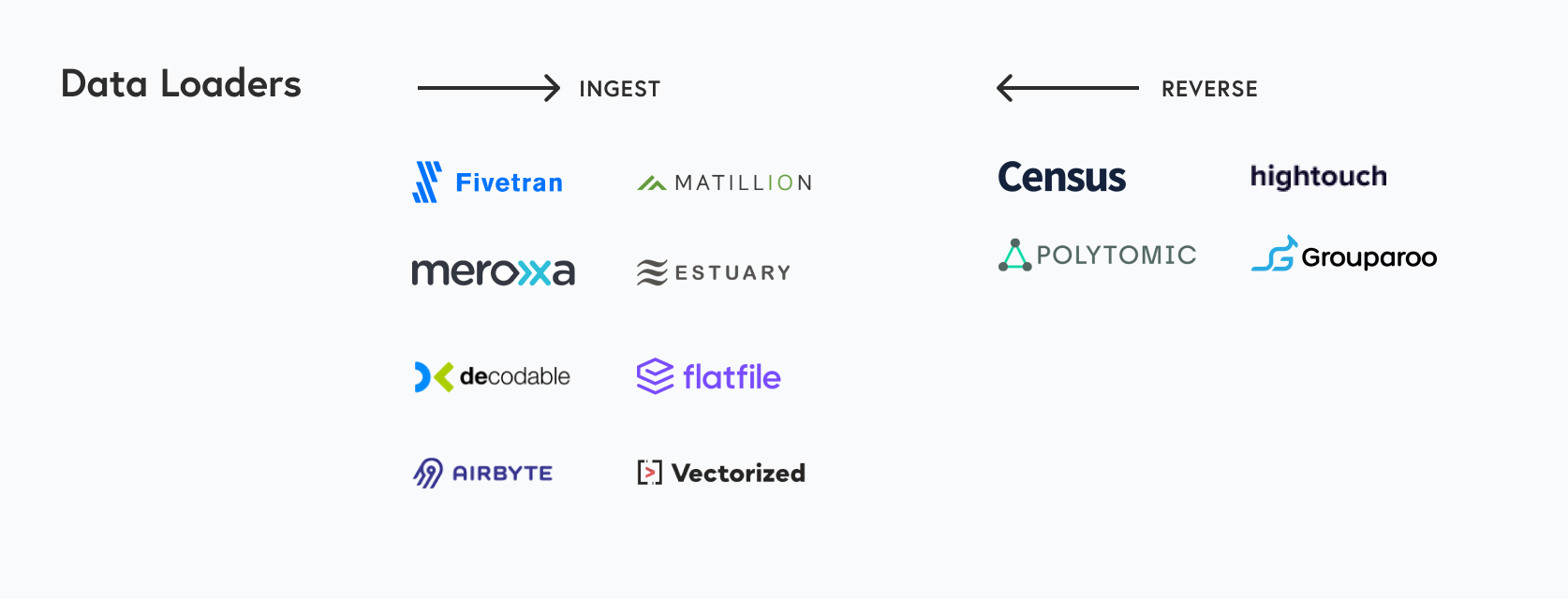

Several categories of data-loading products perform different functions, which we outline here. Perhaps the most notable is ELT (Extract, Load, Transform), which takes data from a source such as a SaaS app and moves it into a data store like Snowflake, where it is then transformed. Historically, data teams had to rely on engineers to build and manage these pipelines. Companies such as Fivetran now offer a managed service, sold on a usage-based pricing model, that reliably and regularly pushes data from place to place. With the click of a few buttons, Fivetran empowers data analysts to take their data from sources such as Postgres, Salesforce, and Amplitude and to join and query it in Snowflake. These data consumers no longer have to wait for an engineer to set up a pipeline; they can stand up dataflows independently and have confidence in their persistence. Airbyte is an open-source alternative to Fivetran that lets companies self-host their own connectors should they want to. It also benefits from a strong open-source community, which develops connectors to all sorts of long-tail applications and sources that closed-source counterparts may not be able to cover.
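To make the extract, load, transform ordering concrete, here is a minimal sketch. It is not how Fivetran or Airbyte are implemented: an in-memory SQLite database stands in for a warehouse such as Snowflake, and `SOURCE_ROWS`, `raw_accounts`, and `seats_by_plan` are hypothetical names chosen for illustration. The point it shows is that raw records land in the warehouse unchanged and are only modeled afterward, with SQL, inside the warehouse.

```python
import sqlite3

# Hypothetical records standing in for rows pulled from a SaaS API.
SOURCE_ROWS = [
    {"account": "acme", "plan": "pro", "seats": 12},
    {"account": "globex", "plan": "free", "seats": 3},
    {"account": "initech", "plan": "pro", "seats": 30},
]


def extract():
    """Extract: read raw records from the source system."""
    return list(SOURCE_ROWS)


def load(conn, rows):
    """Load: land the records in the warehouse without reshaping them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_accounts "
        "(account TEXT, plan TEXT, seats INTEGER)"
    )
    conn.executemany(
        "INSERT INTO raw_accounts VALUES (:account, :plan, :seats)", rows
    )
    conn.commit()


def transform(conn):
    """Transform: model the raw data with SQL inside the warehouse."""
    conn.execute("DROP TABLE IF EXISTS seats_by_plan")
    conn.execute(
        "CREATE TABLE seats_by_plan AS "
        "SELECT plan, SUM(seats) AS total_seats "
        "FROM raw_accounts GROUP BY plan"
    )
    conn.commit()


def run_elt():
    """Run the three steps in order and return the modeled table as a dict."""
    conn = sqlite3.connect(":memory:")  # stand-in for a cloud warehouse
    load(conn, extract())
    transform(conn)
    return dict(conn.execute("SELECT plan, total_seats FROM seats_by_plan"))
```

Because the raw table is kept, the transform step can be rewritten and rerun later without going back to the source system.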

Reverse ETL

Another category serves a similar function to the ELT space, but in the opposite direction. Products in what is currently being called “Reverse ETL” allow data to be pushed from the cloud data warehouse back to an endpoint. Companies such as Hightouch and Census realized that data is now centralized and modeled through products such as dbt, but that applications of that data are isolated to analytics and do not touch operations. For this reason, getting data out of the warehouse and back into a business application is important. For example, Hightouch enables engineers to set up pipelines that send product usage data from Snowflake back to Salesforce or HubSpot to initiate a specific outreach campaign. Hightouch also offers a visual audience builder that lets business users do this type of work directly through its UI, so that typically less technical users can take advantage of data without writing any SQL. The products mentioned above, and the majority of data flows built in-house today, send data in “batch” on a regular cadence.
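The direction of a Reverse ETL sync can be sketched in a few lines. This is an illustration under stated assumptions, not the Hightouch or Census API: SQLite again stands in for the warehouse, and `FakeCRM`, `upsert_contact`, `product_usage`, and the `power_user` segment are all hypothetical.

```python
import sqlite3


class FakeCRM:
    """Hypothetical stand-in for a CRM client such as Salesforce or HubSpot."""

    def __init__(self):
        self.contacts = {}

    def upsert_contact(self, email, fields):
        # Create the contact if it is new, otherwise merge in the new fields.
        self.contacts.setdefault(email, {}).update(fields)


def build_warehouse():
    """Create a small modeled table of product usage (illustrative data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE product_usage (email TEXT, weekly_logins INTEGER)")
    conn.executemany(
        "INSERT INTO product_usage VALUES (?, ?)",
        [("a@example.com", 14), ("b@example.com", 1), ("c@example.com", 9)],
    )
    conn.commit()
    return conn


def sync_power_users(conn, crm, min_logins=5):
    """One batch sync: query the warehouse, then push each row to the CRM."""
    rows = conn.execute(
        "SELECT email, weekly_logins FROM product_usage "
        "WHERE weekly_logins >= ? ORDER BY email",
        (min_logins,),
    ).fetchall()
    for email, logins in rows:
        crm.upsert_contact(
            email, {"weekly_logins": logins, "segment": "power_user"}
        )
    return len(rows)
```

Running `sync_power_users` on a schedule is the batch pattern described above: the CRM is only as fresh as the most recent run.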

As software enables the world to move faster, being able to respond to events almost instantaneously is becoming business critical. A whole set of companies moves data in an event-driven or real-time manner, so that features and responses can react instantly to events happening on a website or in an app. The biggest success story in the real-time data space is Confluent, founded by the creators of the open-source project Apache Kafka. While it first offered just a managed version of Kafka, Confluent has expanded into solutions that not only host Kafka for smaller companies, such as Confluent Cloud, but also enable data teams to do more sophisticated processing of their data streams with external projects such as Flink and Spark. We firmly believe that Confluent, despite creating the category, is not the only option out there for users. Companies such as Vectorized remove the friction of managing ZooKeeper for orchestrating Kafka streams, while Meroxa enables smaller companies to set up Debezium clusters that capture changes within data to trigger a workflow. Imply is building on top of Apache Druid to deliver a foundational analytics platform that enables organizations to gain operational and business intelligence from this new world of streaming data in motion (Kafka, Kinesis).
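The contrast with batch can be illustrated with a toy change-capture loop. This sketch does not use the Kafka or Debezium APIs, and real change data capture typically reads a database's transaction log rather than wrapping writes. `ChangeEvent`, `ObservedTable`, and the order-status example are hypothetical; the idea shown is only that every write emits an event, and a subscriber reacts to it immediately instead of waiting for the next scheduled sync.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    op: str                 # "insert", "update", or "delete"
    key: str
    before: Optional[dict]  # row state before the change, if any
    after: Optional[dict]   # row state after the change, if any


class ObservedTable:
    """A toy table that publishes a ChangeEvent for every write."""

    def __init__(self):
        self._rows: Dict[str, dict] = {}
        self._subscribers: List[Callable[[ChangeEvent], None]] = []

    def subscribe(self, handler):
        self._subscribers.append(handler)

    def _emit(self, event):
        for handler in self._subscribers:
            handler(event)

    def upsert(self, key, row):
        before = self._rows.get(key)
        self._rows[key] = dict(row)
        op = "insert" if before is None else "update"
        self._emit(ChangeEvent(op, key, before, dict(row)))

    def delete(self, key):
        before = self._rows.pop(key, None)
        if before is not None:
            self._emit(ChangeEvent("delete", key, before, None))


def demo():
    """Trigger a workflow (here, collecting an alert) when an order fails."""
    orders = ObservedTable()
    alerts = []

    def on_change(event):
        if event.op == "update" and event.after["status"] == "failed":
            alerts.append(event.key)

    orders.subscribe(on_change)
    orders.upsert("o-1", {"status": "pending"})
    orders.upsert("o-1", {"status": "failed"})
    orders.upsert("o-2", {"status": "pending"})
    return alerts
```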

Workflow Orchestrators

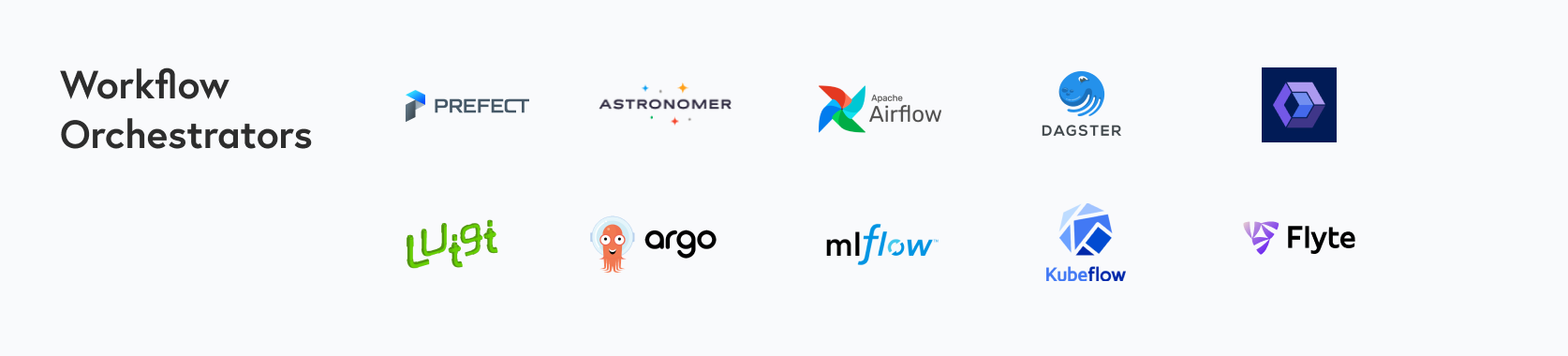

The last category of products we will discuss is colloquially known as “workflow orchestrators.” As cloud architecture diagrams became more and more complicated, larger tech companies were the first to realize they needed reliable ways to move data and execute tasks in a systematic fashion. There are a number of products that let developers integrate and deploy code continuously, but none are well suited to the needs of data teams, who have less expertise in deploying to and maintaining cloud environments.

Companies such as Airbnb and Spotify developed and open-sourced their own offerings to specify all tasks and how they depend on each other, typically in what is known as a DAG (Directed Acyclic Graph). Products built by these companies, such as Airflow and Luigi, have been adopted by less technically sophisticated enterprises and smaller startups in hopes of leveraging those same capabilities to improve their products. Airflow has far and away garnered the most adoption since it’s more useful for data workflows and has a strong open-source community and integration suite.
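For readers who have not worked with one, a DAG of tasks can be sketched with nothing but the Python standard library (version 3.9 or later). This is not Airflow's or Luigi's API; the task names and the `run_dag` helper are hypothetical. Each task lists the tasks it depends on, and the runner executes every task only after all of its dependencies have finished.

```python
from graphlib import TopologicalSorter

log = []

# Hypothetical tasks; each one just records that it ran.
TASKS = {
    name: (lambda n=name: log.append(n))
    for name in ("extract_orders", "extract_users", "join", "report")
}

# Map each task to the set of tasks that must finish before it starts.
DEPENDENCIES = {
    "join": {"extract_orders", "extract_users"},
    "report": {"join"},
}


def run_dag(tasks, dependencies):
    """Run every task once, in an order that respects the dependencies.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle,
    which is what the "acyclic" in DAG rules out.
    """
    order = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        order.append(name)
    return order
```

A production orchestrator adds scheduling, retries, parallel execution, and monitoring on top of this ordering guarantee, which is where most of the operational complexity lives.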

Case Study: Building the new standard in dataflow automation

While Airflow has been a huge benefit to the data ecosystem, it suffers from serious flaws that come as no surprise to anyone who has used it. These flaws include brittleness, lack of support, and issues with deployment. Although Airflow now has a strong commercial entity in Astronomer, most instances are still self-hosted and break often, which leaves data scientists troubleshooting for hours or days. The community is working to resolve these issues, but the project does have some technical limitations as a result of its origins.

With the evolution of today’s modern data stack, it is clear that engineers need a new way to orchestrate their workflows. A great example of a company revolutionizing the way data scientists and data engineers operate is Bessemer portfolio company Prefect. Prefect, often referred to as “the new standard in dataflow automation,” is an open-core workflow orchestration system designed to run a number of tasks in parallel.

Founder and CEO Jeremiah Lowin observed some of the issues with Airflow as a major open-source contributor to the project; he then started Prefect to address the most pressing problems, including task concurrency, infrastructure management, and workflow monitoring. Prefect supports a strong open-source model but also has a cloud offering built on what the company calls its “hybrid model,” in which the cloud service uses only metadata to orchestrate workflows. This gives users the benefits of data security while leveraging the power of the cloud.
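The idea of orchestrating with metadata only can be illustrated with a small sketch. It is our own simplification and not Prefect's API or architecture: `Orchestrator`, `report`, and `run_locally` are hypothetical names. The task and its data stay in the user's environment, and the hosted side only ever receives the task name, its final state, and how long it took.

```python
import time


class Orchestrator:
    """Hypothetical hosted control plane that stores run metadata only."""

    def __init__(self):
        self.runs = []

    def report(self, task_name, state, seconds):
        self.runs.append({"task": task_name, "state": state, "seconds": seconds})


def run_locally(orchestrator, task_name, fn, data):
    """Execute fn(data) in the local environment and report only metadata.

    Neither `data` nor the task's result is passed to the orchestrator.
    """
    start = time.perf_counter()
    try:
        result = fn(data)
        state = "Success"
    except Exception:
        result, state = None, "Failed"
    elapsed = round(time.perf_counter() - start, 6)
    orchestrator.report(task_name, state, elapsed)
    return result
```

Under this split, the control plane can still drive monitoring, alerting, and retries from the reported states without ever holding the underlying data.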

Working toward frictionless data engineering

Products like Fivetran, Hightouch, Meroxa, and Prefect all remove friction from the daily workflow of data teams. No longer do those teams have to spend precious time building pipelines or wait into the night for someone else to fix a broken DAG. More and more of their time can be spent on the meaty, interesting work of creating data models and enabling their use across an enterprise. As the complexity of developing and managing these workflows goes down, individual data consumers will become more productive, and the companies that employ them will have a leg up on their competition.

We mentioned just a few products we are excited about, but we know there are many we did not highlight and others still waiting to be developed by the right team. If you are working on a product that removes complexity from data engineering problems, please reach out to us at datainfra@bvp.com.

Thanks to Jeremiah Lowin, Kashish Gupta, and DeVaris Brown for their input on this article.